Note about the notes

© 2023. This document is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

These notes have been written, edited and published by Oscar Luis Vera-Pérez, using AsciiDoc, AsciiDoctor 2.0.18 and GitHub Pages. They should be considered as a permanent work in progress. Any constructive feedback is welcome. All errors are my own.

The initial content of this document was inspired by the (inherited and redefined) lectures taught by Jean-Marc Jézéquel, Yves Le Traon, Benoit Baudry and Benoit Combemale at the University of Rennes. The topics presented in this document have been expanded with content derived from other sources such as the "Introduction to Software Testing" book written by Paul Ammann and Jeff Offutt, "The Fuzzing Book" by Andreas Zeller, Rahul Gopinath, Marcel Böhme, Gordon Fraser and Christian Holler, and the online material on GUI testing published by Tanja Vos. Many other bibliographical sources and materials were also used and appear in the References section. All images from external sources retain their respective copyrights.

As part of the course, students have to complete a set of exercises for each topic. These exercises can be consulted in the following links:

1. Introduction

Software has become omnipresent in our daily lives. Some people even dare to say that “software is eating the world” (1). Nowadays, we depend more and more on the Internet and the Web which are powered by complex interconnected software systems. We trust software to predict the weather, to guard our bank accounts and to order pizza. Through software we communicate with other people and share our photos. We also use streaming services to watch films and listen to music.

Due to its widespread presence, there are consequences when software is not properly developed. In some cases, a software glitch has no other repercussion than a hilarious "blue screen of death" on an advertising screen. Sadly, software issues may also cost significant amounts of money, they may undermine the reputation of companies and, in some extreme cases, they might even cost human lives.

As developers we need to be sure that the software we build works as expected. This course will briefly introduce some of the most relevant techniques and tools to achieve that goal.

1.1. Bugs, faults, errors and failures

Along with the proliferation of software, the term bug is nowadays known to most people. We say that a piece of software or a program has a bug when its behavior produces unexpected results. Bugs may manifest in very different ways. For example, a web page we visit returns an error message instead of the desired content, or a character does not jump when we press the right button while playing a video game.

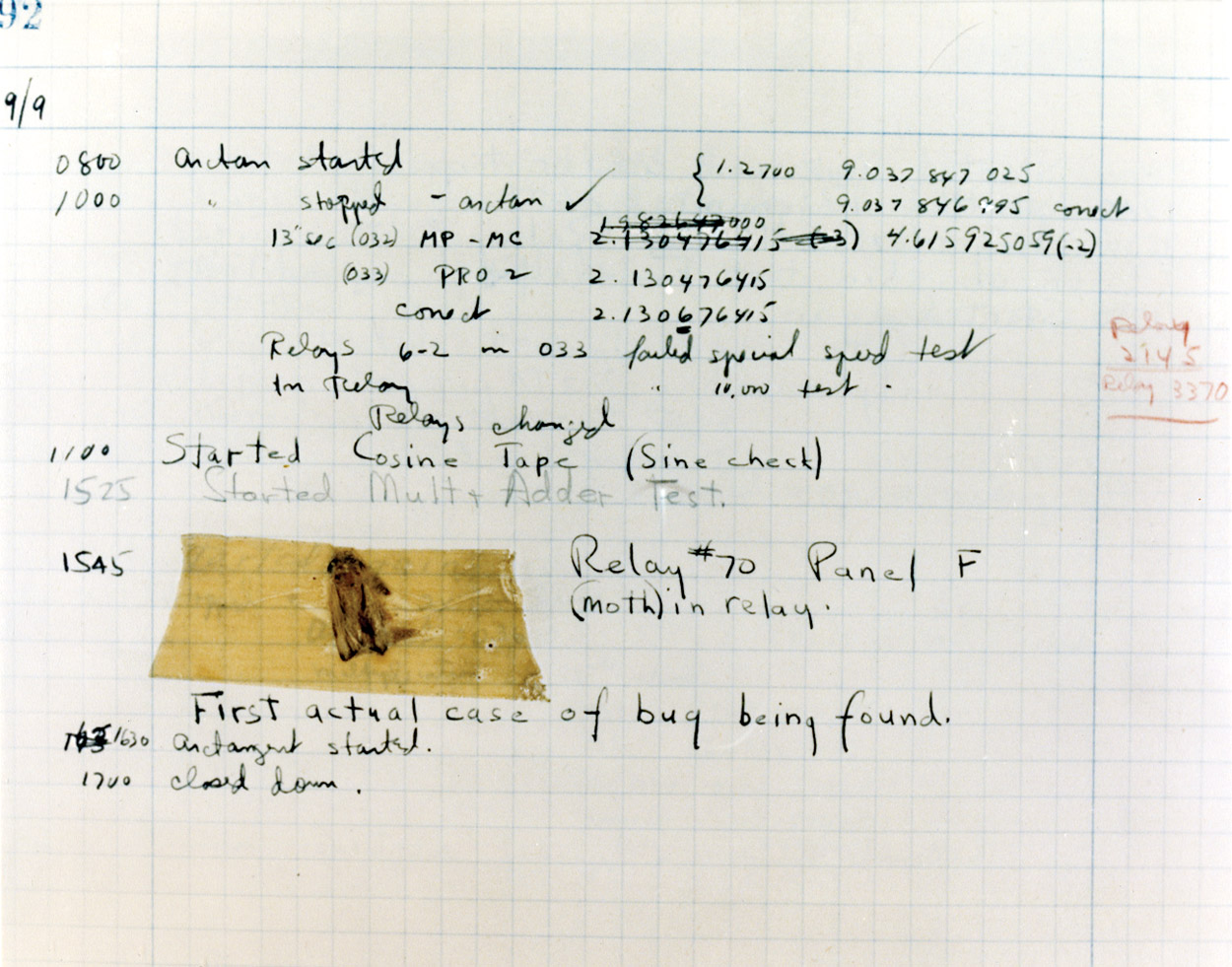

The term bug in Computer Science and Software Engineering has usually been attributed to Grace Hopper. She was involved in the programming and operation of the Mark II, an electromechanical computer that weighed 23 tons and was the size of a room. She was also the creator of the first compiler and one of the first women to reach the rank of rear admiral in the U.S. Navy. Her group traced a computer malfunction to a moth in one of the relays of the machine. This is the first documented case of a computer "bug". The moth was included in one of the notebooks that this group used to log the computer’s operation. It can be seen nowadays at the Smithsonian Institution’s National Museum of American History in Washington, D.C. (Figure 1). However, some historical records state that Thomas Alva Edison had already used the term for something similar in his inventions (2).

Software development usually follows a set of requirements called the product specification. In whatever format it is presented, the specification should describe the features the software will have (functional requirements), e.g. the program should open a document, save it, the user should be able to change the format, and so on. Specifications also include the constraints under which the software should work (non-functional requirements), e.g. the program should be secure, easy to use and fast (5).

A bug appears when the software does not match the requirements. Maybe the program does not correctly fulfill a functional requirement, e.g. the window closes when the maximize button is pressed or part of a document is not correctly formatted. Bugs can also make a program violate a non-functional requirement, e.g. there is a lag between the moment the user types something and the moment the text appears on the screen.

Bugs or faults are produced by human mistakes in the development process. A fault induces a wrong state or error during the execution of the software. When the error produces an incorrect behavior of the program we see a failure.

- Software Fault, Software Defect, Bug: a manifestation of a human mistake in software. It is a static flaw or imperfection found within the code of a program.
- Software Error: an incorrect internal state of the program during execution, produced by a fault.
- Software Failure: a deviation of the software from the expected delivery or service.

A mistake leads to a fault, a fault leads to a software error which leads to a failure when the software is executed. A failure is the manifestation of a bug.

Listing 1 shows a recursive implementation of a binary search over an ordered array. Given an ordered array and a number, the search method returns the position of the number in the array or -1. The private overload of the method uses two auxiliary parameters to delimit the slice of the array that is inspected on each call. The initial slice is the entire array. When the method is invoked, it compares the element in the middle of the slice with the number given as input. If they are equal, then the position in the middle is returned, otherwise the method is invoked with the first half or the second half of the slice. The procedure continues until the number is found in the array, or when there is evidence that the number is not contained, under the assumption that the array is initially sorted.

```java
public static int search(int[] array, int element) {
    return search(array, element, 0, array.length - 1);
}

private static int search(int[] array, int element, int first, int last) {
    if (last < first) {
        return -1;
    }
    int middle = (first + last) / 2;
    int median = array[middle];
    if (element == median) {
        return middle;
    }
    if (element < median) {
        return search(array, element, first, middle - 1);
    }
    return search(array, element, middle, last); // (1)
}
```

- (1) This line contains a fault. The correct code should invoke search with middle + 1 instead of middle.

This implementation has a fault. The second recursive invocation of search, search(array, element, middle, last), should use middle + 1 instead of middle. This fault does not always produce an error. For example, search(new int[]{0, 6, 8, 10}, 6) produces the right result. However, search(new int[]{0, 6, 8, 10}, 10) leads to an invocation where first=2 and last=3. For this invocation, middle, which is computed as (first + last) / 2, results in middle=2. Once the comparisons are done, the same method is invoked again with the same parameters. This is not the right program state, and thus it becomes the error produced by the initial fault. As the method is invoked again with the same parameters, it produces an infinite recursion that manifests as a stack overflow (a StackOverflowError in Java). The stack overflow becomes the failure that evidences the initial fault.
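For comparison, the corrected search could look like the sketch below. The class name BinarySearchFixed is only illustrative, and the midpoint is computed as first + (last - first) / 2, a small variation on the original expression that yields the same value while avoiding integer overflow on very large arrays.

```java
final class BinarySearchFixed {

    static int search(int[] array, int element) {
        return search(array, element, 0, array.length - 1);
    }

    private static int search(int[] array, int element, int first, int last) {
        if (last < first) {
            return -1; // empty slice: the element is not in the array
        }
        // Same midpoint as (first + last) / 2, written to avoid int overflow.
        int middle = first + (last - first) / 2;
        int median = array[middle];
        if (element == median) {
            return middle;
        }
        if (element < median) {
            return search(array, element, first, middle - 1);
        }
        // Corrected call: middle was already compared, so the slice starts at middle + 1.
        return search(array, element, middle + 1, last);
    }
}
```

With this change, searching for 10 in {0, 6, 8, 10} shrinks the slice on every call, so the recursion always terminates.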

Failures may come from all sorts of errors. Some of them could be traced to a very localized and specific part of the code, as in the example before. These are considered as local bugs. Others may be encountered at the interaction of different components of the system. These are considered as global bugs. The following sections describe some famous local and global bugs.

1.1.1. Local bugs and some famous cases

Local bugs can be traced to very specific locations in the code. They can originate from multiple types of errors, such as:

- Omission of potential cases, e.g. not considering that negative numbers could be used in certain operations.
- Lack of checks, e.g. the code does not verify whether a given parameter is null or whether a divisor is different from zero.
- Wrong conditions, e.g. using the wrong comparison operator when comparing two numbers.
- Wrong approximations, e.g. wrong values due to type conversions, such as when we convert a double to an int and lose precision.

In the next sections we present some famous local bugs and briefly inspect their causes.

The case of Zune

The Zune 30 was released to the public in November 2006. It was the first portable media player released by Microsoft. Suddenly, on December 31st, 2008, all Zune 30 devices hung and stopped working. The problem was traced back to a piece of code in the firmware equivalent to Listing 2.

```c
while (days > 365) {
    if (IsLeapYear(year)) {      // (1)
        if (days > 366) {        // (2)
            days -= 366;         // (3)
            year += 1;           // (4)
        }
    }
    else {
        days -= 365;
        year += 1;
    }
}
```

- (1) On December 31st, 2008 the value of year was 2008 and the value of days was 366, so IsLeapYear(year) evaluated to true.
- (2) Since the value of days was 366, days > 366 evaluated to false. This is the fault: no branch handles days == 366, the last day of a leap year, so nothing changes days or leaves the loop.
- (3) This is not executed, therefore the value of days does not change.
- (4) This is not executed, therefore the value of year does not change.

The values of days and year did not change, which produced a wrong internal state and thus the error. The software entered an infinite loop and the devices became non-responsive.

By the next day, days would be 367 and the code would run perfectly. So Zune 30 devices would stop working on December 31st of every leap year. The problem could have been found if the code had been tested with the right input date before releasing the product.
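A possible way to write the same conversion without the fault is sketched below in Java. This is not the actual firmware fix: the class ZuneDate, the method toYearAndDay and the convention that days is a 1-based count starting on January 1st of startYear are assumptions made for illustration.

```java
final class ZuneDate {

    static boolean isLeapYear(int year) {
        return (year % 4 == 0 && year % 100 != 0) || year % 400 == 0;
    }

    /**
     * Converts a 1-based day count starting on January 1st of startYear
     * into a pair {year, dayOfYear}.
     */
    static int[] toYearAndDay(int startYear, int days) {
        int year = startYear;
        while (true) {
            int length = isLeapYear(year) ? 366 : 365;
            if (days <= length) {
                break; // the day falls inside the current year, including day 366 of a leap year
            }
            days -= length;
            year += 1;
        }
        return new int[] {year, days};
    }
}
```

Every iteration either leaves the loop or decreases days, so the loop terminates for any input. A test with the last day of a leap year, such as toYearAndDay(2008, 366), is exactly the kind of input that would have revealed the original fault.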

Heartbleed

Heartbleed is a software vulnerability disclosed in April 2014 that granted attackers access to sensitive information. It was caused by a flaw in OpenSSL, an open source code library implementing the Transport Layer Security and Secure Sockets Layer protocols.

As part of these protocols, a computer sends a heartbeat, an encrypted message that the receiver should echo back, to keep the connection alive. The heartbeat contains information about its own length. The code for the receiver never verified that the message actually had the specified length. To answer, it allocated a memory buffer and copied as many bytes as the heartbeat declared. If the declared length was larger than the actual content, the receiver read past the end of the received message (a buffer over-read) and sent back more data than it had received, potentially including sensitive content from its own memory (7).

In Listing 3 you can see a fragment of the code containing the bug.

```c
...
n2s(p, payload);                                      // (1)
...
buffer = OPENSSL_malloc(1 + 2 + payload + padding);   // (2)
bp = buffer;
...
memcpy(bp, pl, payload);                              // (3)
...
s->msg_callback(1, s->version, TLS1_RT_HEARTBEAT,     // (4)
    buffer, 3 + payload + padding,
    s, s->msg_callback_arg);
```

- (1) Read payload length into payload.
- (2) Allocate memory using the value from the received message.
- (3) Copy the payload and potentially sensitive extra information, as payload might be larger than the data actually received.
- (4) Send the data back.
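The missing verification can be illustrated with a small Java sketch. It is not OpenSSL code: the class HeartbeatSketch, the method payloadToEcho and the simplified record layout (two bytes with the declared length followed by the payload) are assumptions. Java checks array bounds at run time, so the over-read itself cannot happen here. The sketch only shows the comparison between the declared length and the data actually received.

```java
import java.util.Arrays;

final class HeartbeatSketch {

    /**
     * Hypothetical record layout: two bytes with the declared payload length,
     * followed by the payload itself. Returns the payload to echo, or null
     * when the declared length exceeds the bytes actually received.
     */
    static byte[] payloadToEcho(byte[] record) {
        if (record.length < 2) {
            return null; // too short to even carry a length field
        }
        int declared = ((record[0] & 0xFF) << 8) | (record[1] & 0xFF);
        int received = record.length - 2;
        if (declared > received) {
            return null; // the check that was missing: discard the message
        }
        return Arrays.copyOfRange(record, 2, 2 + declared);
    }
}
```

A well-formed message is echoed as is, while a message that claims 16,384 bytes but carries a single one is discarded instead of being answered with whatever follows it in memory.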

Other interesting examples

The USS Yorktown (CG-48) cruiser was selected in 1996 as the testbed for the Smart Ship program. The ship was equipped with a network of several 200 MHz Pentium processors. The computers aboard the ship ran Windows NT 4.0 and executed applications to run the control center, monitor the engines and navigate the ship. On September 21st, 1997 a crew member entered a zero into a database field, causing a division by zero that resulted in a buffer overflow which, in turn, made the propulsion system fail. The ship was dead in the water for several hours and had to be towed back to port (8).

The Patriot missile defense system was able to track the trajectory of enemy projectiles and intercept them. The system stored the clock time in an integer that was converted to a fixed point number and multiplied by 1/10 to produce the time in seconds for the tracking estimation. The computation was performed in a 24-bit fixed point register and the time value was truncated. This would produce an error proportional to the uptime of the system (i.e. it grows in time). Apart from that, the system was updated several times to improve the conversion routine, but the patch was not placed in all the required code locations. On February 25th, 1991 one of these Patriot batteries failed to intercept an Iraqi Scud missile. The battery had been up for 100 hours and the chopping error was around 0.34 seconds. Since a Scud travels at 1,676 m/s, it covers more than half a kilometer in this time. The Scud struck a U.S. Army barracks, killing 28 soldiers and injuring around 100 other people (9).
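The arithmetic behind these figures can be reproduced with a few lines of Java. The sketch assumes that the constant 1/10 was chopped to 23 binary digits after the radix point, which is the figure commonly cited in analyses of the incident and is consistent with the 0.34 seconds above. It also assumes that the clock counted tenths of a second. The names PatriotDrift, errorPerTick and driftSeconds are illustrative.

```java
final class PatriotDrift {

    /** Error introduced each time a clock tick is multiplied by the chopped 1/10. */
    static double errorPerTick() {
        double scale = Math.pow(2, 23);                  // assumed: 23 fractional bits
        double stored = Math.floor(0.1 * scale) / scale; // 1/10 chopped, not rounded
        return 0.1 - stored;
    }

    /** Accumulated clock error, in seconds, after the given uptime. */
    static double driftSeconds(double uptimeHours) {
        double ticks = uptimeHours * 3600 * 10;          // assumed: one tick per tenth of a second
        return ticks * errorPerTick();
    }
}
```

For 100 hours of uptime, driftSeconds returns roughly 0.34 seconds. Multiplied by the 1,676 m/s of the Scud, this gives a tracking error of more than 500 meters.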

Chemical Bank mistakenly deducted about $15 million from more than 100,000 customers in one night. The problem was caused by a line of code that should not have been executed until further changes were made to the system. This line sent a copy of every ATM transaction to the machine processing paper checks, so all transactions were deducted twice (10).

1.1.2. Global bugs and famous cases

Rather than coming from a specific and localized error, some failures may emerge from the interactions of the modules that compose a system. This evidences that the whole is more than the mere sum of its parts.

Some sources of global bugs could be:

- Wrong assumptions about third party components.
- Errors in the reuse of code. For example, using the code in an environment or an architecture for which it was not designed.
- Concurrency bugs that lead to race conditions and deadlocks by incorrectly assuming a certain order of execution.
- Improbable or unforeseen interactions between hardware, software and users.

Race conditions and the Northeast blackout of 2003

A race condition appears when the output of a system depends on the sequence or timing of other uncontrollable events. This may lead to a bug when the effects of this dependency are not carefully considered. For example, in a multithreaded application, a piece of code may be (wrongly) assumed to run before another.

The code in Listing 4 shows a simplified example of a race condition.

```java
import java.applet.Applet;
import java.awt.Graphics;
import java.awt.Image;

public class SimpleApplet extends Applet {
    Image art;

    public void init() {                  // (1)
        art = getImage(getDocumentBase(), getParameter("img"));
    }

    public void paint(Graphics g) {       // (2)
        g.drawImage(art, 0, 0, this);     // (3)
    }
}
```

- (1) init initializes art. If it is not invoked, then art is null.
- (2) paint could be invoked before invoking init.
- (3) If paint is invoked before init, art is null, which produces an error in this line.

To prevent this race condition, the code of paint should not assume that art will always point to an instance. It is enough to check whether art is null before using it.
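The check can be sketched as follows. To keep the example runnable without the applet API, the class SafePainter is a hypothetical stand-in: art is a String instead of an Image, and paint returns a description of what would be drawn.

```java
final class SafePainter {

    // Stands in for the Image field of the applet; null until init() runs.
    private volatile String art;

    void init() {
        art = "logo.png"; // hypothetical resource name
    }

    /** Returns what would be drawn, falling back to a placeholder when init() has not run yet. */
    String paint() {
        String current = art; // read the field once so the check and the use see the same value
        if (current == null) {
            return "placeholder";
        }
        return "image:" + current;
    }
}
```

Copying the field into a local variable before the check matters in a multithreaded setting: the value that is tested is then guaranteed to be the value that is used.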

On August 14th, 2003 the alarm system of FirstEnergy, an electric utility in Akron, Ohio, should have alerted operators about an overload in the electricity transmission lines. A race condition stalled the alarm and the primary server went down. A backup server started processing all demands and also went down after 13 minutes. With both servers down, the information shown on the screens went from being refreshed every 1 to 3 seconds to being refreshed every 59 seconds. The operators were not aware of the actual condition of the grid and the system collapsed, affecting an estimated 50 million people.

You may find an image circulating the Internet that is supposed to show a satellite view of this blackout. The image is in fact fake or was perhaps edited to show the extension of the affected area.

Ariane 5

The Ariane 5 test launch is one of the most referenced examples of the impact that a software bug can have. On June 4th, 1996, the rocket was launched by the European Space Agency from French Guiana. After 40 seconds the rocket exploded at an altitude of more than 3,700 meters.

In (11) the authors explain that, before liftoff, certain computations are performed to align the Inertial Reference System (SRI). These computations should cease at -9 seconds in the launch sequence. But, since there is a chance that a countdown could be put on hold and because resetting the SRI could take several hours, it was preferable to let the computation proceed rather than stop it. The computation continues for 50 seconds after the start of flight mode. Once the rocket is in the air this computation is not used anymore. However, it caused an exception which was not caught and led to the explosion of the rocket.

Part of the software of Ariane 5 was reused from Ariane 4. The reused alignment module converted a 64-bit floating point value, related to the horizontal velocity of the rocket, into a 16-bit signed integer. The conversion of a value too large for 16 bits caused the exception. The assumption that this value would always fit in 16 bits was not documented in the code. The trajectory of Ariane 5 differed from that of Ariane 4: it had considerably higher horizontal velocities, which produced values above the range expected by the module. This was the first launch after a decade of development with an estimated cost of US$7 billion. The rocket and its cargo were valued at an estimated US$500 million.
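The risk of converting a wide floating point value into a narrow integer can be reproduced in Java, with one difference worth stating: the flight software raised an exception on the out-of-range conversion, whereas a plain Java cast silently produces a wrong value. The sketch below shows both behaviors. The names NarrowingSketch, unchecked and checked are illustrative.

```java
final class NarrowingSketch {

    /** Plain cast: values outside the 16-bit range silently become meaningless. */
    static short unchecked(double value) {
        return (short) value;
    }

    /** Guarded conversion: out-of-range values are reported instead of being corrupted. */
    static short checked(double value) {
        if (Double.isNaN(value) || value < Short.MIN_VALUE || value > Short.MAX_VALUE) {
            throw new ArithmeticException("value does not fit in 16 bits: " + value);
        }
        return (short) value;
    }
}
```

For instance, unchecked(40000.0) yields -25536, while checked(40000.0) throws an ArithmeticException. Either way, the caller has to decide what the system should do when the range assumption does not hold, which is precisely the decision that was never made explicit for the reused module.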

The Mars Climate Orbiter

The Mars Climate Orbiter probe was lost when entering the orbit of Mars. The cause was traced to the fact that one development team was using the International System of Units (newton-seconds) while another team was using imperial units (pound-force seconds). The loss was estimated at US$235.9 million (12). The incident still inspires many memes and cruel jokes.

1.2. Why is it so hard to build correct software?

Software inevitably fails. The causes for this are widely varied, as we have seen from the previous examples. No domain related to software escapes from this fact. The consequences of a failure range from banal to terrible. But why is it so hard to build correct software?

First of all, programs are very complex artifacts, even those we may consider simple or trivial.

Consider the code presented in Listing 5.

```java
void alert(int n) {
    countdown(n);
    soundAlarm();
}

void countdown(int n) {
    while (n > 1) {
        if (n % 2 == 0)
            n = n / 2;
        else
            n = 3 * n + 1;
    }
}
```

Is it possible to show that the alarm will sound for every value of n?

For this particular example one could attempt to devise a formal proof. But good luck with that! Mathematicians have been trying to do it since 1937 with no success. countdown is, in fact, an implementation of what is known as the Collatz conjecture.

One could also try to verify the program for every possible input, but this is impossible in the general case.

For this particular example, let us assume that n is a 32-bit unsigned integer. Then we have 2^32 possible inputs, that is, 4,294,967,296 cases for a very simple piece of code of barely 7 lines. If the computation for every input takes on average 2.78e-06 seconds, then we will spend more than 3 hours finding out the result, provided the function stops for every input. More than 3 hours for barely 7 lines of code!
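The estimate can be checked with a short Java sketch. The class CollatzCheck and its methods are hypothetical helpers: steps counts the iterations that countdown would perform, using long so that 3 * n + 1 has room to grow, and exhaustiveHours reproduces the arithmetic of the paragraph above.

```java
final class CollatzCheck {

    /** Number of loop iterations countdown would perform for n. */
    static int steps(long n) {
        int count = 0;
        while (n > 1) {
            n = (n % 2 == 0) ? n / 2 : 3 * n + 1;
            count++;
        }
        return count;
    }

    /** Hours needed to try every 32-bit input at the given average cost per input. */
    static double exhaustiveHours(double secondsPerInput) {
        double inputs = Math.pow(2, 32); // 4,294,967,296 possible inputs
        return inputs * secondsPerInput / 3600.0;
    }
}
```

With 2.78e-06 seconds per input, exhaustiveHours returns about 3.3 hours. Note that steps itself only returns if the sequence reaches 1, so the sketch can confirm termination for the inputs we try, but says nothing about the ones we do not.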

Determining if a procedure halts when given a specific input is known as the Halting Problem (14). The general case of this problem is undecidable. This means that, in general, we cannot know for a given procedure if it will halt when processing a given input.

Let’s prove it. Suppose that it is possible to write a function halts that tells whether a given function f halts when given an input x. That is, halts returns true if f(x) halts (Listing 6) and false otherwise.

Given a function f and an input x for f, halts returns true if the invocation of f(x) halts.

```
function halts(f, x):
    ...
```

If the halts function exists, then we can create a procedure, confused, that will loop forever if halts returns true (Listing 7). That is, confused loops forever if halts(f, f) is true, otherwise it does halt.

```
function confused(f) {
    if (halts(f, f))
        while (true) {}
    else
        return false;
}
```

If we try to compute confused(confused), halts(f, f) is equivalent to halts(confused, confused). If this evaluates to true, it means that confused(confused) halts, but then the procedure enters an infinite loop and so, in fact, confused(confused), which is what we were evaluating in the first place, does not halt. On the other hand, if the condition is false, it means that confused(confused) does not halt, but then the procedure halts.

Therefore, confused(confused) halts if and only if confused(confused) does not halt, which is a contradiction, so halts cannot exist. This means that, in the general case, we cannot prove that a program will halt when processing a given input. Of course, there are specific cases in which this is possible, but it cannot be done for all existing procedures.

Proving the correctness of a program is a very difficult task. There are formal methods to try to achieve this, but they rely on mathematical models of the real world that might make unrealistic assumptions and, as abstractions, are different from the real machines in which programs execute.

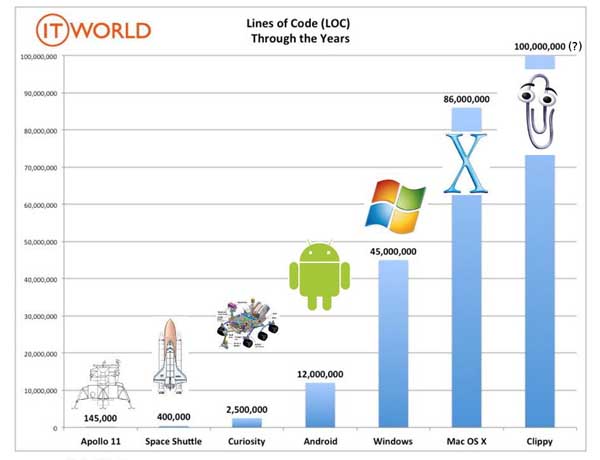

Software is, of course, much more complex than the small functions we have seen so far. As an example, notice that the number of lines of code has increased exponentially over time (though not always in sync with the complexity of the task that the program should achieve). Just take a look at the following comparison:

The software of the Apollo 11 Guidance Computer had 145,000 lines of code, while NASA’s Curiosity rover was programmed with 2.5M lines of code. The infamous Clippy on the other hand, had more than 100M lines of code.

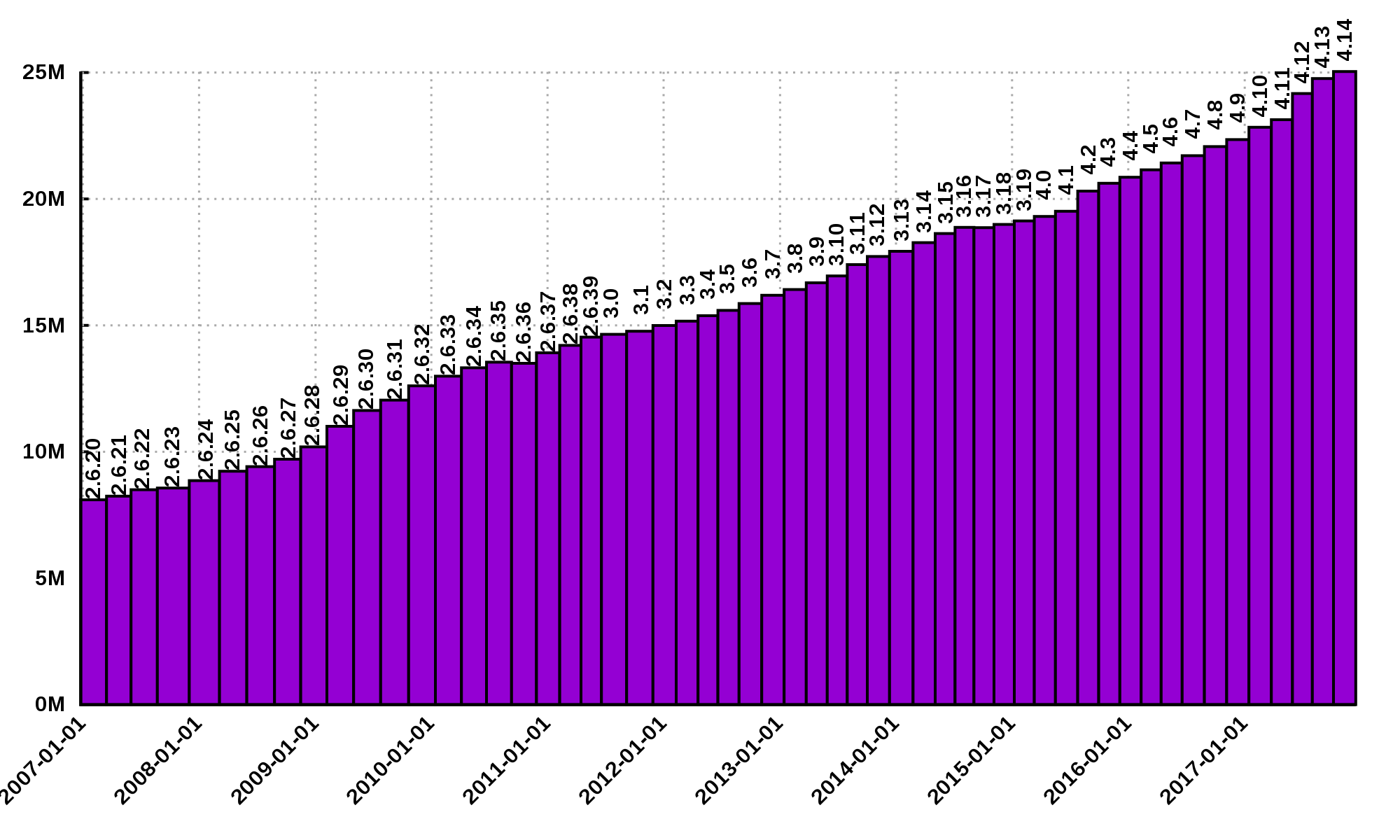

Projects such as the Linux kernel have tripled in size in 10 years:

Firefox contains more than 36M lines of code and Chromium more than 18M. More statistics can be found here.

The complexity of software does not come only from its size. For example, in both Firefox and Chromium, developers use more than 15 different programming languages at the same time.

Open source software also grows in complexity as the number of contributors increases. The Firefox project, for example, has had 6,477 contributors and 996,214 commits as of February 2018.

Also, most software is expected to run on multiple execution platforms (including hardware, operating system, …). Probably the most dramatic scenario in this sense comes from the mobile world. By August 2015 the OpenSignal company reported the existence of 24,093 different Android devices from 1,294 distinct brands (16). Android applications are expected to run correctly on all of them.

Software is also present in systems with real-time computing constraints and sometimes implementing critical functionalities. For example, mp3 players, microwave ovens, GPS devices, medical equipment for vital sign monitoring, avionics (inertial guiding systems), automobiles, fire security systems and the list may go on. As a side note, a car nowadays contains more than 100M lines of code (mostly devoted to the entertainment system), and hundreds of electronic control units (ECU).

On top of that, software is not a static artifact that we release in production and leave as it is. It needs to be maintained over time. For example, Windows 95 was released to manufacturing on August 15th, 1995, and its latest release was published on November 26th, 1997. However, its mainstream support ended on December 31st, 2000, while the extended support ended on December 31st, 2001, that is, five and six years after its initial release. For its part, Windows 7 was released to manufacturing on July 22nd, 2009, its support ended on January 14th, 2020, and the extended security updates for professional users ran until January 10th, 2023, even though most of us no longer use it.

The COBOL language appeared in 1959. It was estimated that, in 1997, around 80% of business transactions ran in COBOL. Even today, it is said that more than 220 billion lines of COBOL are in use (17). Migrating these legacy systems may be risky. In 2012 the Commonwealth Bank of Australia replaced its core banking platform to modernize its systems. The change ended up costing around 750 million dollars, which is why many banks have opted to keep their COBOL systems working. Today there are 60- to 75-year-old consultants providing support for COBOL systems in banks (18). During the Covid-19 crisis, the state of New Jersey in the U.S. requested COBOL programmers to deal with the 40-year-old system that handled the huge amount of unemployment claims it received (19).

The software development process itself can sometimes be rather complex. There are many methodologies for building software, and they may even change during the creation of a new product.

So, the complexity of software may come from its requirements; its size, which can be huge; the number of technologies involved in its development, as tens of languages and frameworks can be used at the same time; the number of people working on its implementation, which could even be in the hundreds; the diversity of platforms on which it must run; and even the development process.

1.3. How to build reliable software?

This is a difficult question and there is no easy answer. Systematically validating and verifying software as it is being built and maintained can lead to fewer bugs. Verification is the process in which we answer "Are we building the product right?", that is, whether the software conforms to its specification. Validation answers "Are we building the right product?". Here we check that the implemented product meets the expectations of the user, i.e., whether the specification captures the customer’s needs.

There are three main general approaches to construct reliable software:

- Fault-tolerance: admits the presence of errors and enhances the software with fault-tolerance mechanisms.
- Constructive approach: involves formal modeling. It guarantees the reliability and correctness by construction.
- Analytical approach: involves techniques to analyze the program in order to detect and fix errors.

1.3.1. Fault-tolerance

This approach assumes that it is impossible to prevent the occurrence of bugs in production. So, it enhances the system with mechanisms to deal with them.

N-version programming is an example of this approach. With an initial and rigorous specification, two or more versions of the same system are developed by different development teams (usually with different backgrounds, and using different tools and methods to build the system). In production, these versions are executed in parallel. The actual output of the entire system is an agreement of the results obtained from all versions.
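The agreement step can be sketched as a majority vote. In the Java sketch below, the class NVersionVoter is hypothetical, each version is modeled as a function from int to int, and the versions are run one after the other instead of in parallel to keep the example short.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.IntUnaryOperator;

final class NVersionVoter {

    /** Runs every version on the same input and returns the output a strict majority agrees on. */
    static int vote(List<IntUnaryOperator> versions, int input) {
        Map<Integer, Integer> counts = new HashMap<>();
        for (IntUnaryOperator version : versions) {
            counts.merge(version.applyAsInt(input), 1, Integer::sum);
        }
        for (Map.Entry<Integer, Integer> entry : counts.entrySet()) {
            if (entry.getValue() * 2 > versions.size()) {
                return entry.getKey();
            }
        }
        throw new IllegalStateException("the versions did not reach a majority");
    }
}
```

If two versions compute x * x and a third, faulty one computes x + x, the vote for the input 3 is 9: the fault in the third version is masked by the other two. The approach relies on the versions failing independently, which is why they are developed by different teams with different tools.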

Another example is chaos engineering, popularized by Netflix with its Simian Army. The main concept is to perform a controlled experiment in production to study how the entire system behaves under unexpected conditions. For example, at Netflix, they would simulate random server shutdowns to see how the system responds to this phenomenon (24). This is a form of testing in production. The main challenges are to design the experiments in a way that the system does not actually fail and to pick the system properties to observe. In the case of Netflix, they want to preserve the availability of the content even when the quality has to be reduced.

Finally, approximate computing techniques (20) can be also applied to deal with a trade-off between accuracy and performance in a changing environment (e.g., time-varying bandwidth), when a good enough result is better than nothing (21). For example, Loop Perforation (22), which transforms loops to perform fewer iterations than the original loop, is used to keep the system running in a degraded environment, with a good enough result (e.g., dynamically adapting the video quality according to the actual bandwidth).
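As a minimal illustration of loop perforation, the sketch below approximates the mean of an array by visiting only one element out of every stride. The class LoopPerforation and the stride parameter are assumptions for this example. Actual loop perforation is applied automatically by a compiler or a runtime, not written by hand.

```java
final class LoopPerforation {

    /**
     * Mean of the values, visiting only one element out of every stride.
     * A stride of 1 is the original loop; larger strides trade accuracy for speed.
     */
    static double mean(double[] values, int stride) {
        if (values.length == 0 || stride < 1) {
            throw new IllegalArgumentException("need at least one value and a positive stride");
        }
        double sum = 0;
        int visited = 0;
        for (int i = 0; i < values.length; i += stride) { // perforated loop: skips stride - 1 elements
            sum += values[i];
            visited++;
        }
        return sum / visited;
    }
}
```

For the values 1 to 6, the exact mean is 3.5 and the perforated loop with a stride of 2 returns 3.0 after half the iterations. Whether such a result is good enough depends entirely on the application.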

1.3.2. Constructive approach

This approach tries to guarantee the absence of bugs by construction. It involves the manual or automatic formal proof of all the components of the system, and their final integration. It is usually based on logical modeling and reasoning and it is used on specific parts of critical software.

The constructive approach may use tools such as Coq, a system to express assertions and mechanically check formal proofs, or Isabelle, an interactive theorem prover. Listing 8 shows how to use Coq to prove that the depth of any interior node in a tree is greater than 0.

```coq
Require Import Lia. (* provides the lia tactic used at the end of the proof *)

Module TreeExample.

Inductive tree : Type :=                          (* (1) *)
  | Leaf : tree
  | Node : tree -> tree -> tree
.

Check Node.

Definition small_tree : tree :=                   (* (2) *)
  Node (Node Leaf Leaf) Leaf.

(* small_tree looks like:
        x
       / \
      x   x
     / \
    x   x
*)

Definition is_leaf (t : tree) : bool :=           (* (3) *)
  match t with
  | Leaf => true
  | Node x y => false
  end.

Fixpoint depth (t : tree) : nat :=                (* (4) *)
  match t with
  | Leaf => 0
  | Node l r => S (max (depth l) (depth r)) (* Successor of the depth of the deeper subtree *)
  end.

Lemma depth_positive :                            (* (5) *)
  forall t : tree, 0 < depth t \/ is_leaf t = true.
Proof.
  induction t.
  {
    cbv [depth is_leaf].                          (* (6) *)
    right.                                        (* (7) *)
    reflexivity.                                  (* (8) *)
  }
  {
    cbn [depth is_leaf].                          (* (9) *)
    left.                                         (* (10) *)
    lia.                                          (* (11) *)
  }
Qed.

End TreeExample.
```

- (1) Definition of a tree type.
- (2) Creating an instance of tree with three leaves and two intermediate nodes.
- (3) Defining is_leaf, which tells whether the given tree is a leaf or not.
- (4) Defining a function to compute the depth of a tree.
- (5) Defining a lemma stating that the depth of a tree is positive when the tree is not a leaf.
- (6) Inline definitions for depth and is_leaf.
- (7) Set the right part of the disjunction as goal for the proof.
- (8) The right part is true. This proves true = true.
- (9) Inline again, but do not overwrite depth and is_leaf. This avoids recursive calls to depth.
- (10) Set the left part of the disjunction as the goal.
- (11) According to depth, the node cannot be a leaf, so the second part of the depth definition is used. This is the inductive step. The successor of a natural number is always greater than 0.

The Coq system helps mechanize the proof of lemmas and theorems by identifying the facts that can be used to achieve the proof and the formulas that still need to be proven.

Coq is also able to extract executable programs from definitions and theorems. There are additional extensions and tools to apply this methodology to other programming languages.

CompCert is the first formally verified C compiler, but it is not bug-free even when a lot of effort has been invested into its formal verification. As said before, the main problem with formal proofs comes from the assumptions they make to abstract the real world. The following quote explains the reason behind a bug found in CompCert:

> The problem is that the 16-bit displacement field is overflowed. CompCert’s PPC semantics failed to specify a constraint on the width of this immediate value, on the assumption that the assembler would catch out-of-range values. In fact, this is what happened. We also found a handful of crash errors in CompCert.

Constructive approaches may also involve a form of model checking. These approaches represent the system as a formal behavioral model, usually a transition system or an automaton. The verification of these models is performed with an exhaustive search over the entire state space. The specification of these models is written with the help of logic formalisms. The exhaustive search is directed at verifying properties the system must have, for example, the absence of deadlocks. Model checking is used in hardware and software verification and, in most cases, it is performed at the system level. It finds application in defense, nuclear plants and transportation.

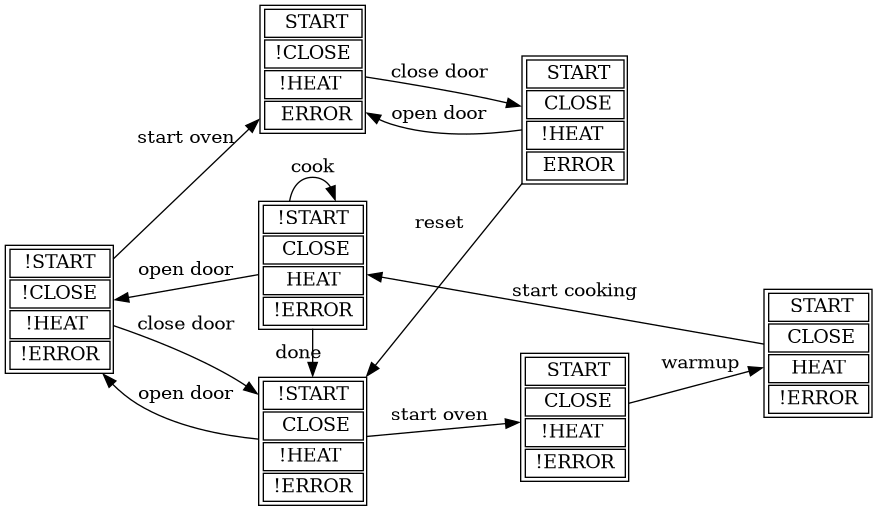

The following diagram shows a model of the functioning of a microwave oven as a Kripke structure (adapted from https://www.dsi.unive.it/~avp/14_AVP_2013.pdf). The model includes first order propositions that characterize the states of the system and a transition relation between the states.

These models can be used to generate concrete code that, for example, would be embedded in specific hardware. It is also possible to verify the state of the system for random inputs and even to prove or falsify properties, e.g., that for every input the heat is not on while the door is open.
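As an illustration of the exhaustive search described above, the following sketch explores every reachable state of a very small oven model and checks the property "the heat is never on while the door is open". The model is an assumption made for this example: it keeps only two propositions (`doorOpen`, `heating`) and is much simpler than the Kripke structure in the figure. The `faultyDoor` flag is also hypothetical and is only there to show how a property gets falsified. Records require Java 16 or later.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Optional;
import java.util.Set;

final class MicrowaveModel {

    // A state is characterized by two propositions (a simplification, not the model of the figure).
    record State(boolean doorOpen, boolean heating) { }

    // Transition relation: every state reachable in one step from s.
    // With faultyDoor == true, opening the door does not switch the heat off.
    static List<State> successors(State s, boolean faultyDoor) {
        List<State> next = new ArrayList<>();
        if (s.doorOpen()) {
            next.add(new State(false, s.heating()));              // close the door
        } else {
            next.add(new State(true, faultyDoor && s.heating())); // open the door
            next.add(new State(false, !s.heating()));             // press start, or cooking ends
        }
        return next;
    }

    // Breadth-first exploration of the whole reachable state space.
    // Returns a reachable state that violates the safety property, if there is one.
    static Optional<State> findViolation(State initial, boolean faultyDoor) {
        Set<State> visited = new HashSet<>();
        Deque<State> frontier = new ArrayDeque<>();
        visited.add(initial);
        frontier.add(initial);
        while (!frontier.isEmpty()) {
            State current = frontier.remove();
            if (current.doorOpen() && current.heating()) {
                return Optional.of(current);
            }
            for (State next : successors(current, faultyDoor)) {
                if (visited.add(next)) {
                    frontier.add(next);
                }
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        State initial = new State(false, false);
        System.out.println("Correct model: " + findViolation(initial, false));
        System.out.println("Faulty model:  " + findViolation(initial, true));
    }
}
```

Real model checkers work on far larger state spaces and richer temporal properties, but the principle is the same: the property is either confirmed for all reachable states or refuted with a concrete counterexample state.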

1.3.3. Analytical approach

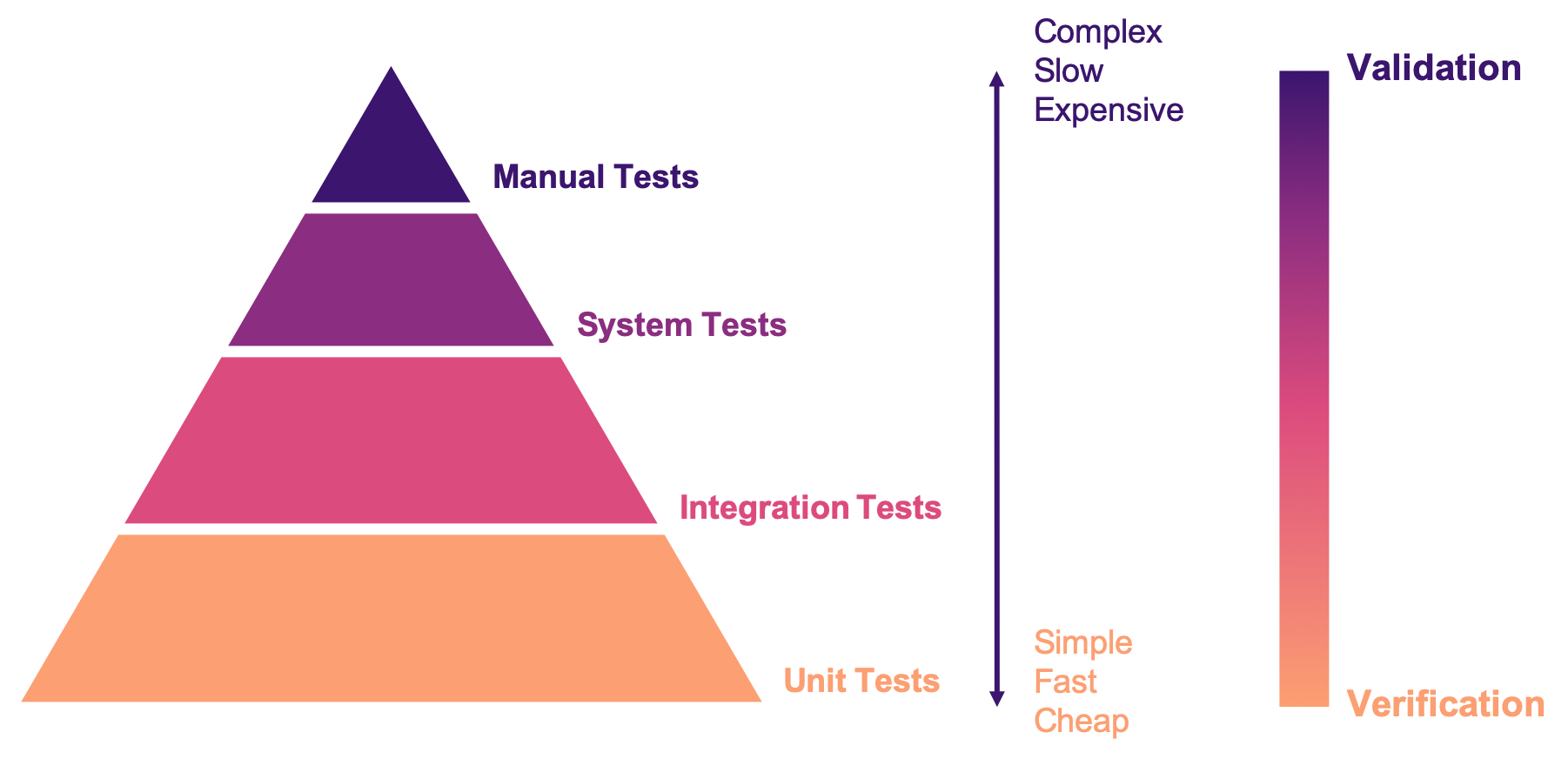

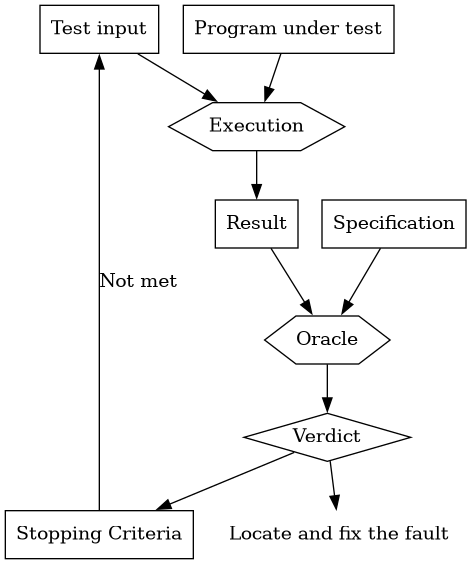

This approach is directed to find the presence of bugs in the system. It is usually based on heuristics and can target all kinds of software artifacts: code, models, requirements, etc. Its most widely used variant is software testing, which evaluates a program by observing its execution under different conditions [ammann2017introduction]. Testing presents, nowadays, the best trade-off between effort and result when it comes to the verification and validation of a software product. It will be the main focus of this course.

Bertrand Meyer proposes seven principles of testing (23):

- Principle 1: To test a program is to try to make it fail

-

This is the main purpose of testing: to find defects in the code. In the words of Meyer, the single goal of testing is to uncover faults by triggering failures. Testing cannot be used to show the absence of bugs, as Dijkstra said and Meyer recalls, but it is extremely useful in finding those scenarios in which the software does not behave as intended. Meyer’s definition presents testing as a dynamic technique, that is, testing requires the execution of a program. However, there are static code analysis techniques and tools that help detect potential faults by finding well-known code patterns that are prone to errors, or that ensure code quality by enforcing development guidelines. In the long term these techniques help reduce the occurrence of bugs at a lower cost, since they don’t execute the program. Some authors refer to these analyses as static testing. There is controversy on whether these static analyses are in fact testing or not, but since they are highly valuable for the quality of the software we shall discuss them in the course.

- Principle 2: Tests are no substitute for specifications

-

Tests are built from specific cases, instances of the different scenarios in which the software shall execute. The specification is composed of more general abstractions tied to human understanding. While the specification can be used to derive test cases, the opposite is not necessarily true. Even in large numbers, a finite number of tests may not capture the general properties of the system due to missing instances.

- Principle 3: Any failed execution must yield a test case, to remain a permanent part of the project’s test suite

-

Once a fault has been discovered there is always the peril that it can reappear later. It happens often in practice. Uncovered faults should then become test cases that prevent these regressions. This is known as regression testing.

- Principle 4: Determining the success or failure of tests must be an automatic process

-

Once a test is executed, one needs to know if the software behaved as expected. Thus, we need a test oracle to produce such a verdict. As the number of test cases grows, this task must be automated. It does not scale to run hundreds of test cases, print the output of the program and then manually check whether the output is correct.

- Principle 5: An effective testing process must include both manually and automatically produced test cases

-

Manually produced test cases come from the understanding developers have about the problem domain and the input, or from Principle 3, as Meyer explains. But corner cases and very specific cases often escape human intuition. Complementing manually designed test cases with automatically produced test cases can help spot what developers missed. Computers are able to generate test cases at a scale that humans cannot reach and help explore unforeseen scenarios.

- Principle 6: Evaluate any testing strategy through objective assessment using explicit criteria in a reproducible testing process

-

Any testing strategy must be assessed empirically. No matter how sophisticated a testing technique can be, it is of no use if it can not discover faults. Meyer recalls that simple techniques such as random testing are proven to be quite efficient. Then there is the question on how to evaluate the effectiveness of our testing strategy.

- Principle 7: A testing strategy’s most important property is the number of faults it uncovers as a function of time

-

Code coverage, that is, the parts of the code executed by the test cases, is often used to evaluate the quality of tests. However, this is only useful to spot the parts of the code that aren’t yet tested, not how well the executed parts are verified. So, coverage is not, in general, a measure of the quality of the tests. The assessment of the tests should correspond to their ability to detect bugs. In this principle Meyer includes time. Of course, the faster faults are encountered, the better.

This set of principles is not comprehensive and not all authors and practitioners agree with all aspects of their formulations. However, in our opinion, they reveal the essence of testing.
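Principles 4, 5 and 6 can be illustrated together with a minimal sketch: test inputs are produced automatically at random, an automated oracle gives the verdict, and a fixed seed keeps the process reproducible. The `max` method, the oracle and the names `countFailures` and `RandomMaxTest` are assumptions chosen for this example, not part of Meyer’s article.

```java
import java.util.Random;

final class RandomMaxTest {

    // Hypothetical implementation under test: maximum of three integers.
    static int max(int a, int b, int c) {
        if (a > b) {
            return (a > c) ? a : c;
        }
        return (b > c) ? b : c;
    }

    // Automated oracle: the result must be one of the inputs
    // and must not be smaller than any of them.
    static boolean oracle(int a, int b, int c, int result) {
        boolean isUpperBound = result >= a && result >= b && result >= c;
        boolean isOneOfTheInputs = result == a || result == b || result == c;
        return isUpperBound && isOneOfTheInputs;
    }

    // Generates `trials` random test cases and returns how many of them fail.
    // The explicit seed makes every run reproducible.
    static int countFailures(long seed, int trials) {
        Random random = new Random(seed);
        int failures = 0;
        for (int i = 0; i < trials; i++) {
            int a = random.nextInt();
            int b = random.nextInt();
            int c = random.nextInt();
            if (!oracle(a, b, c, max(a, b, c))) {
                failures++;
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        System.out.println("Failures: " + countFailures(42L, 10_000));
    }
}
```

Note that the oracle checks a property of the output instead of comparing against hand-written expected values, which is what allows the verdict to be computed for inputs no developer wrote by hand.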

| Meyer’s article Seven Principles of Software Testing provoked an answer from Gerald D. Everett, a testing expert and also author of books on the topic. The answer qualified Meyer’s principles as insufficient since they don’t encompass other software quality aspects. The discussion went on with more answers and short essays from both authors. The entire discussion is worth reading. More details and pointers can be found in Meyer’s own blog: https://bertrandmeyer.com/2009/08/12/what-is-the-purpose-of-testing/. Needless to say, we agree with Meyer’s point of view. |

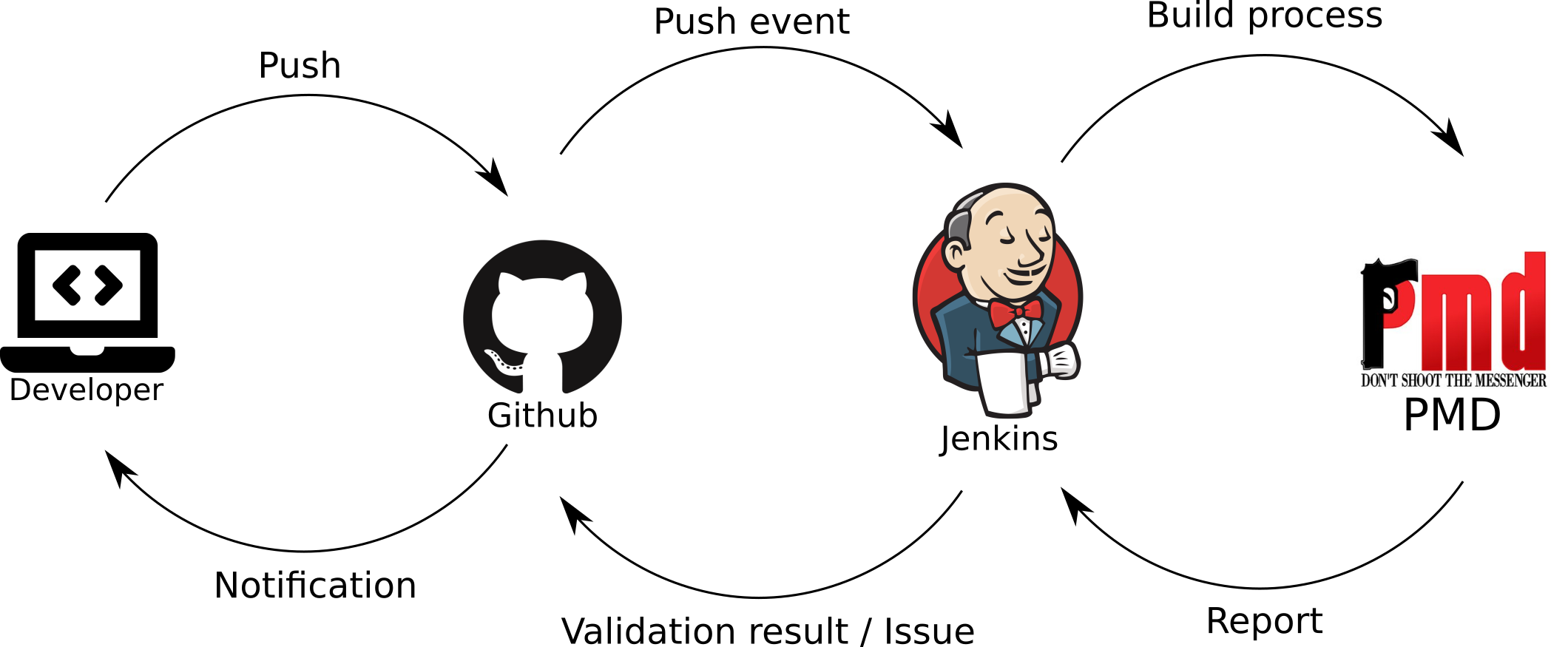

1.3.4. Modern practices: CI/CD and DevOps

Nowadays testing is automated as much as possible. Software developers use automated processes to facilitate the integration of the work done separately by team members, detect errors as fast as possible and automate most tedious and error-prone tasks.

Continuous Integration (CI) is one of those practices. It is a process in which developers frequently integrate their code into a single shared source control repository. After a change is pushed to a central repository, an automated pipeline is triggered to build and verify the application after the incorporation of the new change. (25) (26)

According to Martin Fowler:

Continuous Integration doesn’t get rid of bugs, but it does make them dramatically easier to find and remove.

Chief Scientist ThoughtWorks

The frequent integration of each developer’s work facilitates the early detection of errors, as opposed to each developer working in isolation and then spending a lot of time dealing with the combination of their individual efforts. Most software companies these days use a form of CI, and the most used source control hosting services, such as GitHub, GitLab and Bitbucket, encourage these practices by making it easy to incorporate CI tools and even by providing their own CI automation alternatives.

According to Thoughtworks, (26) CI processes are supported by the following practices:

- Maintenance of a single source repository

-

All team members should merge their changes into a global/unique code repository, hosted in a source control hosting service, either on-premises or using a public service like GitHub. The source control repository plays an important role in the identification of a change and the detection of conflicts between simultaneous changes. The common practice nowadays is to use distributed source control systems like Git or Mercurial, as opposed to the previous centralized systems like CVS or SVN. Even when the source control system is distributed, that is, every developer has a copy of the repository, the CI process should monitor one central repository to which all developers should push their changes. This does not exclude the creation of mirror repositories.

- Automate the build

-

Once a developer pushes her changes into the global repository, a CI server checks out the changes and triggers a build process. This build process is expected to be self-testing, that is, as part of the automated build, tests should be executed to verify the changes in the code. These tests should also be executed in an environment as close as possible to the production conditions. The build is also expected to be fast, so developers get quick feedback on the change they integrated. The outcome of the build process should be accessible to all team members so they know the current state of the project.

CI processes also impose responsibilities on developers: they are expected to push changes frequently, and changes should be tested before they are integrated into the global repository. Developers should not push any change while the automated build is failing, that is, when a previous change produced a failure in the CI build process, either while compiling or while running the tests. When a build fails it should be fixed as fast as possible to ensure the quality of the integrated code in the global repository.

CI processes are often accompanied by Continuous Delivery and Continuous Deployment processes.

Continuous Delivery is an automated process involving a verification pipeline whose outcome determines if a change is ready to be deployed. It may involve a larger build process than the one of the CI, including acceptance tests, which are tests in direct correlation to the requirements or the user’s needs, tests in several environment conditions, such as different operating systems, and it may even include manual testing. Once a change passes the delivery pipeline it is considered as robust enough to be deployed.

For its part, Continuous Deployment is an automated process that puts artifacts produced and verified by successful builds into production. Continuous Deployment requires Continuous Delivery. Both enable frequent product releases. Some companies may release their products on a daily or even an hourly basis.

CI/CD approaches find great realization in DevOps. DevOps is a modern development culture in which team members of all roles commit to the quality of the final product instead of dividing themselves into silos like the "development team" or the "operations team". Automation is at the core of DevOps, as every development phase is backed by automated processes and state-of-the-art tools. In DevOps, all phases (plan, code, build, test, release, deploy, operate and monitor) are intertwined in an infinite loop (Figure 6) and the outcome of one phase impacts the others. For example, crashes observed in production by monitoring the system automatically become issues for developers and are incorporated into the set of tests.

2. Code quality and static analysis

The goal of any software project is to deliver a product of the highest possible quality, or at least it should be. Good software has few bugs. However, the notion of quality also characterizes how the product is being built and structured. Observing these aspects should help, in the end, to avoid the introduction of bugs. Diomidis Spinellis in his book Code Quality: The Open Source Perspective (26) presents four views of software quality:

- Quality in use

-

This view is centered on the user experience (UX), that is, how users perceive the software. It is the extent to which users can achieve their goals in a particular environment. It does not care about how the product is built. If a word processor makes it hard to edit a document, then it does not serve its purpose.

- External quality attributes

-

These attributes manifest themselves in the execution of the program. They characterize how well the product conforms to the specification. Observed failures signal the presence of bugs. Crashes, efficiency problems and the like degrade the external quality of the software. External quality attributes directly affect the quality in use.

- Internal quality attributes

-

They characterize the internal structure of the product. According to Martin Fowler (27), internal software quality is impacted by how easy it is to understand the code. This, in turn, impacts how easy it is to maintain the product, add new features, improve it and detect errors. Internal quality has a great impact on the external quality of the software.

- Process quality

-

It is a direct expression of how the software product was built. It is affected by the methodologies, practices, tools and frameworks used to develop the software. A good development process favors actions that improve the internal quality of the product, and minimize the amount of accidental complexity which is introduced into engineering solutions due to mismatches between a problem and the technology used to represent the problem.

In the end, all views of quality are deeply interconnected. The process quality impacts the internal quality. The internal quality affects in turn the external quality which directly affects the user experience.

Several models of software quality attributes have been proposed and standardized throughout the years (28), (29). However they all insist, as the Consortium for IT Software Quality (CISQ) summarizes, that high quality software products must be: reliable (low risk level in use and low likelihood of potential failures), efficient, secure, and maintainable.

Martin Fowler (27) explains that, as time goes by and a project becomes more complex, it is harder to add new features. At this point, even small changes require programmers to understand large areas of code. Paying attention to internal quality is crucial to further develop the product. Fowler calls cruft those deficiencies in internal quality that make it harder to modify and extend the system. Crufts are redundant, useless and dysfunctional pieces of code that become obstacles and increase maintenance costs. This is part of what is also known as Technical Debt.

External quality attributes can be observed and improved with the help of testing. Meanwhile, internal quality attributes are observed by analyzing the code without executing it, that is, with the help of static code analysis. Some authors consider static analysis as a form of static testing. However, Meyer in his principles (23) excludes these techniques from the testing umbrella. Either way, static analysis helps to spot potential problems earlier and therefore impacts the testing process.

2.1. Code quality

Internal quality is assured by good code and good development practices. Good code is easy to understand and maintain. But, what may be easy to understand for one developer might be hard to understand for another, and even for the same developer two weeks after the code was written. Fortunately, the community has gathered and shared practices shown to work well, or not, according to experience.

2.1.1. Coding guidelines

Some good development practices are presented as coding guidelines or coding conventions. These are rules that specify how the code should be written so it can be understood by everyone (e.g., The Google Java Style Guide (1)). They may go from how to name a class or a method and how to handle exceptions to how and when to insert blank spaces in the code.

Coding guidelines may be specific to a programming language or to a particular framework, library or tool. They may even be specific to a company or a project. There are also guidelines general enough to be applied anywhere, and guidelines that pursue a specific goal, such as reducing security breaches in a program.

Naming conventions

There are only two hard things in Computer Science: cache invalidation and naming things.

Phil Karlton, Product Architect at Netscape

Part of the coding guidelines is devoted to helping developers name program elements such as classes or methods. These guidelines differ from one language to another, although they share common ideas. This section contains three examples from Java, C# and Python.

The following Example 1 is an extract from the Java coding conventions (30). This fragment specifies how developers should name classes, interfaces and methods.

Class names should be nouns, in mixed case with the first letter of each internal word capitalized. Try to keep your class names simple and descriptive. Use whole words—avoid acronyms and abbreviations (unless the abbreviation is much more widely used than the long form, such as URL or HTML).

Interface names should be capitalized like class names.

Methods should be verbs, in mixed case with the first letter lowercase, with the first letter of each internal word capitalized.

    public class ArrayList extends AbstractList implements RandomAccess {
        public void ensureCapacity(int minCapacity) { ... }
    }

Example 2 is an extract from the naming guidelines for C#.

✔️ DO name classes and structs with nouns or noun phrases, using PascalCasing. This distinguishes type names from methods, which are named with verb phrases.

✔️ DO name interfaces with adjective phrases, or occasionally with nouns or noun phrases.

❌ DO NOT give class names a prefix (e.g., "C").

…

✔️ DO prefix interface names with the letter I, to indicate that the type is an interface.

✔️ DO ensure that the names differ only by the "I" prefix on the interface name when you are defining a class–interface pair where the class is a standard implementation of the interface.

There are similarities between the naming conventions for Java and C#. For example, class names should be noun phrases starting with a capital letter and using PascalCasing (also called UpperCamelCase, DromedaryCase or CapWords). Method names in both languages should be verb phrases indicating an action, although in Java developers use camelCasing for them. Notice that, in C#, interfaces should be prefixed with I but classes should not be prefixed with C. Listing 10 shows a code fragment matching these naming conventions. The I prefix helps to quickly differentiate between classes and interfaces.

    public class ComplexNode : Node, IEnumerable
    {
        public void RemoveNode(Node node) { ... }
    }

Python developers use conventions to further differentiate methods and functions from classes, and user-defined classes from built-in types (32). See Example 3.

Class names should normally use the CapWords convention. The naming convention for functions may be used instead in cases where the interface is documented and used primarily as a callable.

Note that there is a separate convention for builtin names: most builtin names are single words (or two words run together), with the CapWords convention used only for exception names and builtin constants.

Function names should be lowercase, with words separated by underscores as necessary to improve readability.

With this, one can easily infer that Node names a class, str is a built-in type and remove_node is a function.

Naming conventions are derived, in most cases, from the taste and practice of the community around a language or framework. In the end, these conventions help improve the readability of the code, as developers can quickly understand the role of each element in a program.

Indentation

In most languages extra white spaces do not change the semantics of a program but they may play an important role in readability. For example, the indentation is useful to know the limit of methods, classes, nested instructions and any block in general. Each programming language tries to enforce an indentation style, but even for the same language different developers may follow different styles. Keeping a consistent style improves the understanding of a program.

Table 1 shows examples of different indentation styles applied to the same fragment of code. Notice how different the program looks in each case.

| Kernighan & Ritchie (K&R 1TBS) | Allman | Ratliff | Haskell |

The Kernighan & Ritchie style, also known as “the one true brace style” and “Egyptian braces”, was used in the influential book The C Programming Language written by Brian Kernighan and Dennis Ritchie (creator of C). Besides C, this style is also used in C++ and Java. C#, however, uses the Allman style, in which the opening brace is written on a separate line. The Allman style is also used in Pascal and SQL.

Wikipedia lists nine different indentation styles, most of them with additional variants [wikipedia20202indentation].
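To make the difference concrete, the sketch below writes the same hypothetical method twice, once in the Kernighan & Ritchie style and once in the Allman style. The method names `absKnr` and `absAllman` are made up for this illustration. Both versions compile to the same behavior, since only the placement of braces changes.

```java
final class BraceStyles {

    // Kernighan & Ritchie style: the opening brace stays on the line of the statement.
    static int absKnr(int x) {
        if (x < 0) {
            return -x;
        } else {
            return x;
        }
    }

    // Allman style: every brace sits on its own line, aligned with the statement.
    static int absAllman(int x)
    {
        if (x < 0)
        {
            return -x;
        }
        else
        {
            return x;
        }
    }

    public static void main(String[] args) {
        System.out.println(absKnr(-3) + " " + absAllman(-3));
    }
}
```

Mixing both styles in one file, as done here for comparison, is exactly what a project should avoid in practice.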

Framework and company specific guidelines

Companies and even communities around a framework or project may impose specific guidelines to override or extend language conventions.

Sometimes these guidelines have a concrete goal other than readability. For instance, Example 4 shows an extract of the guidelines Microsoft enforces to write secure code using the .NET framework (34).

When designing and writing your code, you need to protect and limit the access that code has to resources, especially when using or invoking code of unknown origin. So, keep in mind the following techniques to ensure your code is secure:

- Do not use Code Access Security (CAS).
- Do not use partial trusted code.
- Do not use the AllowPartiallyTrustedCaller attribute (APTCA).
- Do not use .NET Remoting.
- Do not use Distributed Component Object Model (DCOM).
- Do not use binary formatters.

When a reference to a static class member must be qualified, it is qualified with that class’s name, not with a reference or expression of that class’s type.

    Foo aFoo = ...;
    Foo.aStaticMethod(); // good
    aFoo.aStaticMethod(); // bad
    somethingThatYieldsAFoo().aStaticMethod(); // very bad

Should conventions always be enforced?

Conventions are created to set a common ground for understanding. This is especially useful when we are learning a new language and to ease the collaboration between different developers in a project. However, there are cases in which strictly following these conventions actually has the opposite effect. For example, when dealing with legacy code that followed different guidelines, it is better to stick to the practices in place rather than introducing new conventions.

In any case, the ultimate goal must be to write consistent code that can be understood by all team/project members. Common sense is always the best guideline.

Example 6 explains how to name extending classes with respect to the base class, but it also warns against over-use (31).

✔️ CONSIDER ending the name of derived classes with the name of the base class.

This is very readable and explains the relationship clearly. Some examples of this in code are: ArgumentOutOfRangeException, which is a kind of Exception, and SerializableAttribute, which is a kind of Attribute. However, it is important to use reasonable judgment in applying this guideline; for example, the Button class is a kind of Control event, although Control doesn’t appear in its name.

Example 7 shows an extract from the Python coding guidelines stressing the idea that keeping consistency is more important than following the guidelines (32).

A style guide is about consistency. Consistency with this style guide is important. Consistency within a project is more important. Consistency within one module or function is the most important.

However, know when to be inconsistent — sometimes style guide recommendations just aren’t applicable. When in doubt, use your best judgment. Look at other examples and decide what looks best. And don’t hesitate to ask!

In particular: do not break backwards compatibility just to comply with this PEP!

2.1.2. Code Smells and AntiPatterns

Throughout the years, developers have identified patterns of code that usually become symptoms of hidden problems affecting the quality of the software. Such code patterns are known as Code Smells (also known as bad smells), a term coined by Kent Beck and first presented in Martin Fowler’s Refactoring book [fowler2006codesmells].

Code smells do not always lead to a problem or a bug. But, in most cases, their presence makes the code harder to understand and maintain, and in Fowler’s words “they are often an indicator of a problem rather than the problem themselves”. Code smells can be eliminated by refactoring, that is, restructuring the program to make it simpler.

The Source Making Blog presents a list of well-known code smells and how they could be solved (37). The Internet is full of such lists, which might differ in the (generally catchy) names they use to categorize a smell, and some might miss one or two patterns.

The following is a small sample from that list.

- Long method

-

A method that contains too many lines of code or too many statements. Long methods tend to hide unwanted duplicated code and are harder to maintain. This smell can be solved by splitting the code into shorter methods that are easier to reuse, maintain and understand. Listing 11 shows a fragment of nearly 20 lines of code taken from (39). It is already a big chunk of code, but it comes from a very large method of more than 350 lines. This is a clear, and rather extreme, example of this code smell.

Listing 11. An already large fragment of code from a method of more than 350 lines. Taken from [glover2006monitoring]

    if (entityImplVO != null) {
        List actions = entityImplVO.getEntities();
        if (actions == null) {
            actions = new ArrayList();
        }
        Iterator enItr = actions.iterator();
        while (enItr.hasNext()) {
            entityResultValueObject arVO = (entityResultValueObject) actionItr
                .next();
            Float entityResult = arVO.getActionResultID();
            if (assocPersonEventList.contains(actionResult)) {
                assocPersonFlag = true;
            }
            if (arVL.getByName(
                AppConstants.ENTITY_RESULT_DENIAL_OF_SERVICE)
                .getID().equals(entityResult)) {
                if (actionBasisId.equals(actionImplVO.getActionBasisID())) {
                    assocFlag = true;
                }
            }
            if (arVL.getByName(
                AppConstants.ENTITY_RESULT_INVOL_SERVICE)
                .getID().equals(entityResult)) {
                if (!reasonId.equals(arVO.getStatusReasonID())) {
                    assocFlag = true;
                }
            }
        }
    }else{
        entityImplVO = oldEntityImplVO;
    }

- Large class

-

A class containing too many methods, fields and lines of code. Large classes can be split into several classes and even into a hierarchy in which each smaller class has a very well defined purpose.

- Long parameter list

-

A method with a long list of parameters is harder to use. Parameters could be replaced by method calls or passing complete objects.

- Primitive obsession

-

Abuse of primitive types instead of creating one’s own abstractions.

- Temporary fields

-

Fields in classes that are used only under certain circumstances, in one or very few methods, and are otherwise unused. Most of the time these fields could be turned into local variables.

- Feature envy

-

A method that accesses the data of another object more than its own data. This method’s behavior will probably be better placed in the class of the external object.
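Some of the smells listed above can be shown with a short before-and-after sketch. The first method takes a long list of primitive parameters (long parameter list, primitive obsession). The refactored version groups related values into a small abstraction and moves the behavior next to the data it uses, which is also the usual remedy for feature envy. The names `distance`, `Point` and `distanceTo` are assumptions made for this example. Records require Java 16 or later.

```java
final class SmellRefactoring {

    // Before: four primitive parameters whose order is easy to mix up at the call site.
    static double distance(double x1, double y1, double x2, double y2) {
        return Math.hypot(x2 - x1, y2 - y1);
    }

    // After: a dedicated abstraction replaces the loose primitives,
    // and the computation lives in the class that owns the data.
    record Point(double x, double y) {
        double distanceTo(Point other) {
            return Math.hypot(other.x - x, other.y - y);
        }
    }

    public static void main(String[] args) {
        System.out.println(distance(0, 0, 3, 4));
        System.out.println(new Point(0, 0).distanceTo(new Point(3, 4)));
    }
}
```

A call such as `origin.distanceTo(target)` also reads closer to the problem domain than a call with four unnamed numbers.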

Code smells are very well localized program fragments. However, there are more global patterns that are often used as solutions to a problem but that may bring more harm than benefit and are better avoided. These bad solutions are described as AntiPatterns. The same Source Making Blog provides an interesting list of AntiPatterns related to coding practices, software architecture designs and even the management of a project. Identifying these bad solutions also helps in finding a better alternative (38).

Here are some examples:

- Golden Hammer

-

Using a single tool to solve most problems even when it is not the best alternative. It leads to inferior performance and less suitable solutions: requirements are accommodated to match the tool rather than what users may need, design choices are dictated by the tool’s capabilities and new development relies heavily on the tool.

- Cut-And-Paste Programming

-

This one is self-descriptive: code is reused by copying and pasting fragments in different places. In the case that the originally copied code has a bug, then the issue will reoccur in all places where the code was pasted and it will be harder to solve.

- Swiss Army Knife

-

An excessively complex class interface attempting to provide a solution for all possible uses of the class. These classes include too many method signatures for a single class. It denotes an unclear abstraction or purpose.

- Design By Committee

-

A software design, usually from a committee, that is so complex and so full of different features and variants that it becomes impossible to complete in a reasonable lapse of time.

2.1.3. Code Metrics

Many code smells are vague in their formulation. For example: How can we tell that the code of a method or a class is too long? Or, how can we tell that two classes are too coupled together so their functionalities should be merged or rearranged? The automatic identification of such potential issues requires a concrete characterization of the method length or the coupling between classes. These characterizations are usually achieved with the help of code metrics.

Code metrics compute quantitative code features, for example, how many lines of code have been used to write a method, or how many instance variables the method accesses or modifies. Metrics can be used to assess the structural quality of the software. They provide an effective and customizable way to automate the detection of potential code issues. Computing the length of a method in terms of lines of code may help to detect those that are too big and could pose a maintenance problem. Obtaining such metrics is relatively easy and can be automated, so it could be done when we compile or build our project. Once metrics are computed, we can set thresholds to identify parts of the code that could be problematic. As an example, we could consider as long any method with more than 20 lines of code. However, not all projects or languages would consider the same threshold values: in some scenarios methods with more than 30 lines are perfectly valid and common.

This section presents some examples of the most common metrics used in practice.

Lines of Code

The simplest code metric is, maybe, the already mentioned number of Lines of Code (LoC) of a method.

| Sometimes code metrics are presented for operations instead of methods. Operations are indeed methods but the term is broader to escape from the Object-Oriented terminology and reach other programming paradigms. |

A long method is hard to understand, maintain and evolve. LoCs can be used to compare the length of the methods in a project and detect those that are longer than a given threshold. However, this threshold depends on the development practices used for the project. The programming language, as well as the frameworks and libraries supporting the code, have an impact on the length of the methods. For example, a small study made by Jon McLoone from Wolfram (40) observed, in the Rosetta Code corpus of programs, that many of those written with Mathematica require less than a third of the length of the same tasks written in other languages.

Including blank lines or lines with comments in the metric could be misleading. Therefore, LoC is often referred to as Physical Lines of Code, while developers also measure Logical Lines of Code (LLoC), which counts the number of programming language statements in the method.
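The effect of blank lines and comments on the metric can be seen with a small sketch. It counts the physical lines of a method body and, separately, the lines that are neither blank nor `//` comments, and then compares the second count against a threshold. This is only a crude approximation under stated assumptions: it does not handle block comments, and it counts lines, not statements, so it is not a real LLoC implementation. The names `LineCounter`, `codeLines` and `isTooLong` are hypothetical.

```java
import java.util.List;

final class LineCounter {

    // Physical lines: every line of the method, including blanks and comments.
    static int physicalLines(List<String> lines) {
        return lines.size();
    }

    // Lines that are neither blank nor made only of a `//` comment.
    static int codeLines(List<String> lines) {
        int count = 0;
        for (String line : lines) {
            String trimmed = line.trim();
            if (!trimmed.isEmpty() && !trimmed.startsWith("//")) {
                count++;
            }
        }
        return count;
    }

    // Flags a method whose code line count exceeds a project-specific threshold.
    static boolean isTooLong(List<String> lines, int threshold) {
        return codeLines(lines) > threshold;
    }

    public static void main(String[] args) {
        List<String> method = List.of(
            "int max(int a, int b) {",
            "",
            "    // pick the larger value",
            "    return a > b ? a : b;",
            "}");
        System.out.println("Physical lines: " + physicalLines(method));
        System.out.println("Code lines: " + codeLines(method));
        System.out.println("Too long (threshold 20): " + isTooLong(method, 20));
    }
}
```

The threshold is a parameter on purpose, since, as discussed above, acceptable values vary between projects and languages.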

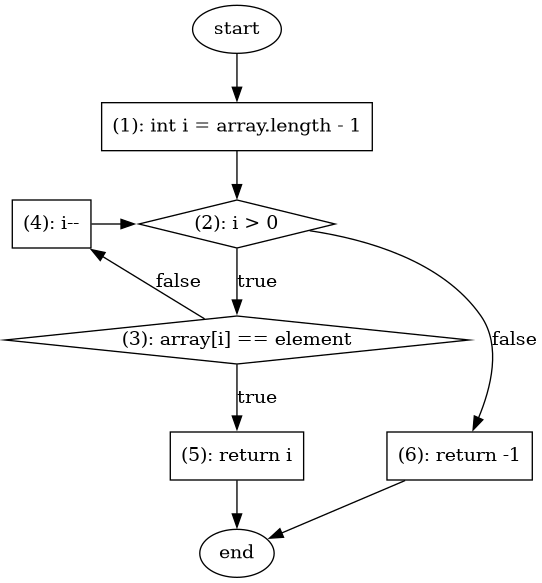

Cyclomatic Complexity

A method with many branches and logical decisions is, in general, hard to understand. This affects the maintainability of the code. Back in 1976, Thomas J. McCabe proposed a metric to assess the complexity of a program (41). McCabe’s original idea was to approximate the complexity of a program by computing the cyclomatic number of its control flow graph. This is why the metric is also known as McCabe’s Cyclomatic Complexity. The goal of the metric was to provide a quantitative basis to determine whether a software module was hard to understand, maintain and test.

A sequence of code instructions, and by extension the body of a method, could be represented by a directed graph named control flow graph. The procedure is as follows:

- Initially, the graph has two special nodes: the start node and the end node.
- A sequence of instructions with no branches is called a basic block. Each basic block becomes a node in the graph.
- Each branch in the code becomes an edge. The direction of the edge coincides with the direction of the branch.
- There is an edge from the start node to the node with the first instruction.
- There is an edge from all nodes that could terminate the execution of the code to the end node.

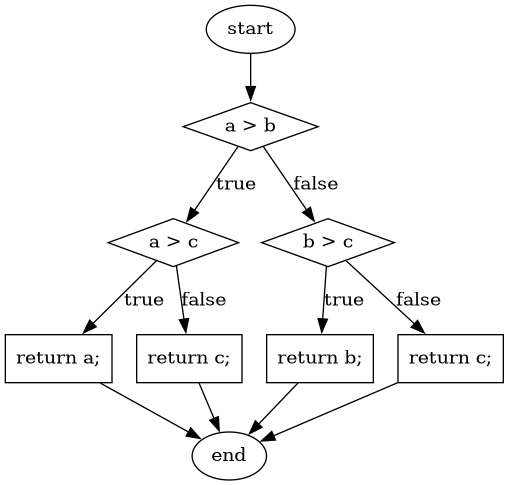

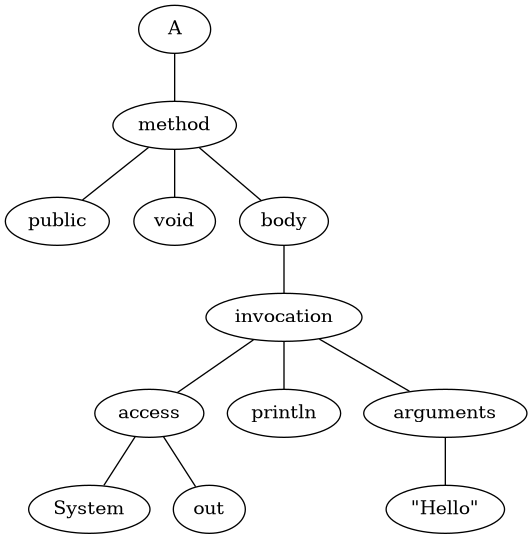

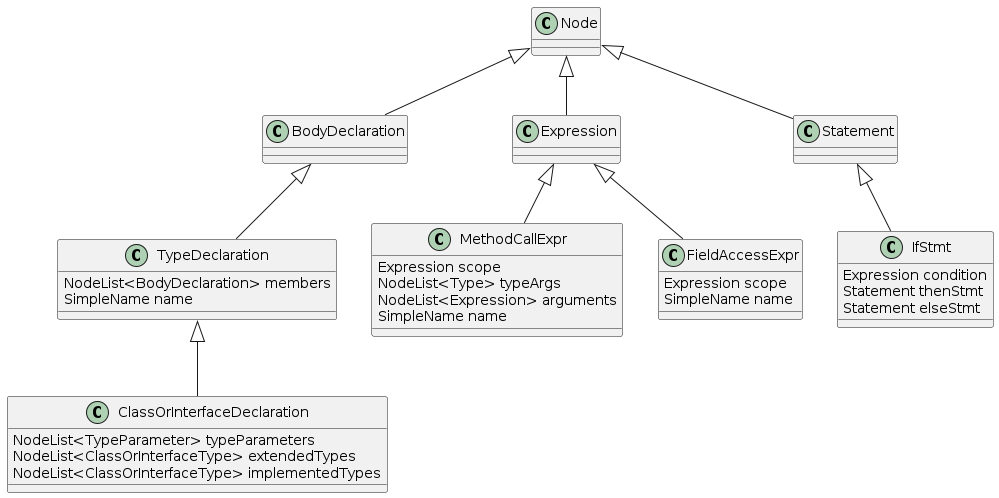

For example, the method in Listing 12 computes the maximum of three given integers. The control flow graph for this method is shown in Figure 7.

    public static int max(int a, int b, int c) {
        if (a > b) {
            if (a > c) {
                return a;
            }
            else {
                return c;
            }
        }
        else {
            if (b > c) {
                return b;
            }
            else {
                return c;
            }
        }
    }

The cyclomatic complexity of a program, represented by its control flow graph, is defined as \$v(G) = E - N + 2P\$, where \$N\$ is the number of nodes, \$E\$ the number of edges and \$P\$ the number of connected components of the underlying undirected graph. In the way we have defined the control flow graph, \$P\$ is always 1. This metric is directly derived from the cyclomatic number or circuit rank of the undirected graph. This property represents the minimum number of edges that have to be removed in order to break all cycles and obtain a spanning tree.

McCabe showed that the computation of the cyclomatic complexity can be simplified to the number of predicate nodes (conditionals) plus one. The method in Listing 12 has a cyclomatic complexity of \$v(G) = 4 = 3 + 1\$, as it has three conditionals: a > b, a > c and b > c. It can also be computed as \$v(G) = 4 = 11 - 9 + 2\$, as it has eleven edges, nine nodes and only one connected component.
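Both computations can be checked with a small sketch. It encodes the control flow graph of the max method as a map from each node to its successors and derives the complexity in the two ways described above: edges minus nodes plus twice the number of connected components, and predicate nodes plus one. The class name `MaxControlFlowGraph` and the node labels are illustrative assumptions, not part of any tool.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class MaxControlFlowGraph {

    // Control flow graph of max(a, b, c): each node is mapped to its successors.
    // The labels are illustrative; "return c" appears twice in the method.
    static Map<String, List<String>> build() {
        Map<String, List<String>> graph = new LinkedHashMap<>();
        graph.put("start", List.of("a > b"));
        graph.put("a > b", List.of("a > c", "b > c"));
        graph.put("a > c", List.of("return a", "return c #1"));
        graph.put("b > c", List.of("return b", "return c #2"));
        graph.put("return a", List.of("end"));
        graph.put("return c #1", List.of("end"));
        graph.put("return b", List.of("end"));
        graph.put("return c #2", List.of("end"));
        graph.put("end", List.of());
        return graph;
    }

    static int nodes(Map<String, List<String>> graph) {
        return graph.size();
    }

    static int edges(Map<String, List<String>> graph) {
        return graph.values().stream().mapToInt(List::size).sum();
    }

    // Edges minus nodes plus 2P, with P = 1 for a graph built as described above.
    static int complexityFromGraph(Map<String, List<String>> graph) {
        return edges(graph) - nodes(graph) + 2;
    }

    // McCabe's shortcut: predicate nodes (nodes with two successors) plus one.
    static int complexityFromPredicates(Map<String, List<String>> graph) {
        long predicates = graph.values().stream()
                .filter(successors -> successors.size() > 1)
                .count();
        return (int) predicates + 1;
    }

    public static void main(String[] args) {
        Map<String, List<String>> graph = build();
        System.out.println(complexityFromGraph(graph));      // 4
        System.out.println(complexityFromPredicates(graph)); // 4
    }
}
```

The shortcut relies on every predicate node having exactly two outgoing edges, which holds for the if statements in this method.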

McCabe’s cyclomatic complexity is well known and widely used. It is frequently accompanied by a scale. Values below 10 are usually considered good. However, some caveats of the metric must be taken into account. First, it was conceived for unstructured programs and some aspects of its original definition are vague. Modern tools implementing the metric work under different assumptions, so two different tools may not produce the same result for the same method. Logical conjunctions and disjunctions (&& and || in some programming languages) also produce branches, but not all tools include them in their results.

The cyclomatic complexity does not always match the developer’s idea of what a complex method is. For example, the metric does not consider nested structures. It produces the same value for the two code fragments in Listing 13.

    // 1
    if (a) {
        if (b) {
            ...
        }
        else {
            ...
        }
    }
    else {
    }

    // 2
    if (a) {
        ...
    }
    else {
    }
    if (b) {
    }
    else {
    }

In (42), the author advocates against the use of the metric. Besides showing concrete examples where tools produce different results, he discusses the method in Listing 14. The author explains that this method is fairly easy to understand, yet it has a cyclomatic complexity of 13, while the more complex method in Listing 15 has a cyclomatic complexity of 5.

String getMonthName (int month) {

switch (month) {

case 0: return "January";

case 1: return "February";

case 2: return "March";

case 3: return "April";

case 4: return "May";

case 5: return "June";

case 6: return "July";

case 7: return "August";

case 8: return "September";

case 9: return "October";

case 10: return "November";

case 11: return "December";

default:

throw new IllegalArgumentException();

}

}int sumOfNonPrimes(int limit) {

int sum = 0;

OUTER: for (int i = 0; i < limit; ++i) {

if (i <= 2) {

continue;

}

for (int j = 2; j < i; ++j) {

if (i % j == 0) {

continue OUTER;

}

}

sum += i;

}

return sum;

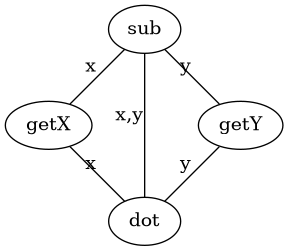

}Coupling between objects or class coupling

A class is coupled to another if the former uses a method or a field from the latter. Coupling between classes cannot be avoided and is, in fact, desirable. We create classes as functional units for reuse. At some point, existing classes will be leveraged to create new functionalities. However, coupling has important implications: changing a class will, most of the time, require changing its dependent classes. Therefore, tight coupling between classes harms modularity and makes software too sensitive to change and harder to maintain (43) (44).

Class coupling or Coupling Between Objects (CBO) of a class is the number of external classes it uses. In Listing 16, Point has a CBO of 0. It only depends on double and the metric does not consider primitive types. Segment, on the other hand, depends on Point and has a CBO of 1. The metric counts only unique classes. In the example, Segment uses Point several times, but it is counted only once.

Point has a CBO of 0 and Segment has a CBO of 1.

    class Point {
        private double x, y;

        public Point(double x, double y) {
            this.x = x;
            this.y = y;
        }

        public double getX() {
            return this.x;
        }

        public double getY() {
            return this.y;
        }

        // Dot product of this point and p, both seen as vectors
        public double dot(Point p) {
            return x*p.x + y*p.y;
        }

        // Component-wise difference: this - p
        public Point sub(Point p) {
            return new Point(x - p.x, y - p.y);
        }
    }

    class Segment {
        private Point a, b;

        public Segment(Point a, Point b) {
            this.a = a;
            this.b = b;
        }

        // True when the projection of p onto the segment falls between a and b
        public boolean has(Point p) {
            Point pa = p.sub(a);
            Point ab = b.sub(a);
            double product = pa.dot(ab);
            return 0 <= product && product <= ab.dot(ab);
        }
    }

Classes with low CBO values, that is, loosely coupled classes, are easier to reuse. Classes with large CBO values, that is, tightly coupled classes, should be avoided and refactored. If a tightly coupled class is necessary, then it requires rigorous testing to correctly verify how it interacts with all its dependencies.

Coupling could be measured not only at the class level but also between any modules at all granularity levels (e.g., packages, components…).
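As a rough illustration of how such a count could be obtained, the sketch below approximates CBO with Java reflection. It is only an approximation under stated assumptions: it inspects the declared fields, constructor parameters, method parameters and return types of a class, so classes used only inside method bodies are not seen. The class `CboSketch` and the method `cbo` are hypothetical names, and the nested Point and Segment classes are trimmed-down versions of the ones discussed above.

```java
import java.lang.reflect.Constructor;
import java.lang.reflect.Field;
import java.lang.reflect.Method;
import java.util.HashSet;
import java.util.Set;

final class CboSketch {

    static class Point {
        double x, y;
        Point(double x, double y) { this.x = x; this.y = y; }
        double dot(Point p) { return x * p.x + y * p.y; }
        Point sub(Point p) { return new Point(x - p.x, y - p.y); }
    }

    static class Segment {
        Point a, b;
        Segment(Point a, Point b) { this.a = a; this.b = b; }
        double squaredLength() { Point ab = b.sub(a); return ab.dot(ab); }
    }

    // Approximate CBO: number of distinct non-primitive types, other than the
    // class itself, that appear in the signatures of its declared members.
    static int cbo(Class<?> type) {
        Set<Class<?>> used = new HashSet<>();
        for (Field field : type.getDeclaredFields()) {
            if (!field.isSynthetic()) {
                collect(field.getType(), used);
            }
        }
        for (Constructor<?> constructor : type.getDeclaredConstructors()) {
            if (constructor.isSynthetic()) continue;
            for (Class<?> parameter : constructor.getParameterTypes()) {
                collect(parameter, used);
            }
        }
        for (Method method : type.getDeclaredMethods()) {
            if (method.isSynthetic()) continue;
            collect(method.getReturnType(), used);
            for (Class<?> parameter : method.getParameterTypes()) {
                collect(parameter, used);
            }
        }
        used.remove(type); // a class is not coupled to itself
        return used.size();
    }

    // Primitive types (and void) are ignored; arrays count as their element type.
    private static void collect(Class<?> candidate, Set<Class<?>> used) {
        while (candidate.isArray()) {
            candidate = candidate.getComponentType();
        }
        if (!candidate.isPrimitive()) {
            used.add(candidate);
        }
    }

    public static void main(String[] args) {
        System.out.println(cbo(Point.class));   // 0
        System.out.println(cbo(Segment.class)); // 1
    }
}
```

Real tools usually analyze source code or bytecode instead, so that uses inside method bodies are counted as well, and they may differ on whether library classes such as String are included.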

The Law of Demeter (LoD) or principle of least knowledge is a guideline aiming to keep classes loosely coupled (46). Its idea is that any unit should only "talk" to "its closest friends" and not to "strangers". In the context of Object-Oriented Programming, it means that a method can only invoke methods from the receiver (this), a parameter, an object instantiated in the method or an attribute of the class. Listing 17 shows examples of a good use and some violations of this principle.

    public class Foo {
        public void example(Bar b) {
            C c = b.getC();        // (1)
            c.doIt();              // (2)
            b.getC().doIt();       // (3)
            D d = new D();
            d.doSomethingElse();   // (4)
        }
    }

| Callout | Description |
|---|---|
| 1 | Conforms to LoD |
| 2 | Violates LoD as c was not created inside example |
| 3 | Chaining method invocations does not conform to LoD |
| 4 | Conforms to LoD, as d was created inside the method |

LoD has downsides as well. A strict adherence to its postulates may produce many unnecessary wrapper methods. In Listing 17, the class Bar should have a wrapper method doItInC whose code could be this.getC().doIt() or something similar. This kind of wrapper would be widespread in the code and could become a maintenance challenge. On the other hand, fluent APIs encourage the use of method chains, which also tends to improve readability.
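A minimal sketch of such a wrapper is shown below. The classes Foo, Bar and C and the method doItInC follow the names used in the text; their bodies are assumptions made only for illustration, and the `calls` counter is a hypothetical addition that makes the effect observable.

```java
final class LawOfDemeterSketch {

    static class C {
        private int calls = 0; // hypothetical counter, only to observe the call

        void doIt() {
            calls++;
        }

        int calls() {
            return calls;
        }
    }

    static class Bar {
        private final C c = new C();

        C getC() {
            return c;
        }

        // Wrapper method: Bar talks to its own attribute on behalf of its clients.
        void doItInC() {
            this.getC().doIt();
        }
    }

    static class Foo {
        // Conforms to LoD: only a method of the parameter b is invoked.
        void example(Bar b) {
            b.doItInC();
        }
    }

    public static void main(String[] args) {
        Bar bar = new Bar();
        new Foo().example(bar);
        System.out.println(bar.getC().calls()); // 1
    }
}
```

Foo no longer needs to know that C exists, but Bar has gained a method whose only purpose is delegation, which is the cost mentioned above.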

Class cohesion

A class in an object-oriented program, or a module in general, is expected to have a responsibility over a single and well defined part of the software’s functionalities. All services/methods of the module/class should be aligned with this responsibility and this responsibility should be entirely encapsulated in the class. This ensures that the module/class is only changed when the requirements concerning the specific responsibility change. Changes to different requirements should not force a single class to change (47) (48). This is known as the Single Responsibility Principle and it was coined by Robert C. Martin in the late 1990s. This principle puts the S in the SOLID principles of object-oriented programming.

| The SOLID principles of object-oriented programming are: S: Single Responsibility Principle, O: Open/Closed Principle, L: Liskov’s Substitution Principle, I: Interface Segregation Principle and D: Dependency Inversion Principle. |

If a class violates this principle, then it can probably be divided into two or more classes with different responsibilities. In this case we say that the class lacks cohesion. In a more concrete view, a cohesive class performs different operations on the same set of instance variables (43).

There are several metrics to evaluate cohesion in classes, but most of them are based on the Lack of Cohesion Of Methods (LCOM) (43). This metric is defined as follows:

Let \$C\$ be a class with \$n\$ methods \$M_1, ..., M_n\$, and let \$I_j\$ be the set of instance variables used by the method \$M_j\$. Let \$P = \{ (I_i, I_j) | I_i \cap I_j = \emptyset, i \gt j \}\$, that is, the pairs of methods that use disjoint sets of instance variables, and \$Q = \{ (I_i, I_j) | I_i \cap I_j \ne \emptyset, i \gt j \}\$, that is, all pairs of methods using at least one instance variable in common. Then \$\text{LCOM}(C) = |P| - |Q|\$ if \$|P| \gt |Q|\$, and \$\text{LCOM}(C) = 0\$ otherwise.

This means that LCOM is equal to the number of pairs of methods using disjoint sets of instance variables minus the number of pairs of methods using variables in common, or zero if that difference is negative. The more pairs of methods use disjoint sets of instance variables, the less cohesive the class is. A class is cohesive if its methods use the same variables to compute different things. Low values of LCOM are preferred.

Table 2 shows the set of all instance variables used by each method declared in the Point class shown in Listing 16. Constructors are not used to compute this metric, as their role is to initialize the variables and they virtually access all of them. In this particular example, all methods use the instance variables directly. However, a method could use an instance variable indirectly by invoking other methods. In that case, the variables are also said to be used by the initial method. For example, any new method invoking getX in Point would also use variable x.

| Method | Instance variables |
|---|---|
| getX | { x } |
| getY | { y } |
| dot | { x, y } |
| sub | { x, y } |

Table 3 shows the instance variables used in common for all pairs of methods declared in Point. Only getX and getY do not use any variable in common.

| | getX | getY | dot |
|---|---|---|---|
| sub | { x } | { y } | { x, y } |
| dot | { x } | { y } | |
| getY | \$\emptyset\$ | | |

Given that, we obtain \$ | P | = | \{ (I_\text{getX},I_\text{getY}) \} | = 1 \$ and \$ | Q | = | \{ (I_\text{getX},I_\text{sub}), (I_\text{getX},I_\text{dot}), (I_\text{getY},I_\text{sub}), (I_\text{getY},I_\text{dot}), (I_\text{dot},I_\text{sub}) \} | = 5 \$, producing \$ \text{LCOM}(C) = 0 \$, as \$ | P | \lt | Q | \$. This means that the Point class is cohesive: it carries the responsibility of representing the concept of a two-dimensional point. Only a change in the requirements of this representation will make this class change.
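The computation can be sketched in a few lines of Java. The class `Lcom` and its method `lcom` are hypothetical names. The sketch assumes that the sets of instance variables used by each method have already been extracted, for instance by hand as in Table 2, and that constructors have been left out.

```java
import java.util.Collections;
import java.util.List;
import java.util.Set;

final class Lcom {

    // Each element is the set of instance variables used by one method.
    static int lcom(List<Set<String>> usedVariables) {
        int disjointPairs = 0; // |P|
        int sharingPairs = 0;  // |Q|
        for (int i = 0; i < usedVariables.size(); i++) {
            for (int j = 0; j < i; j++) { // i > j, as in the definition
                if (Collections.disjoint(usedVariables.get(i), usedVariables.get(j))) {
                    disjointPairs++;
                } else {
                    sharingPairs++;
                }
            }
        }
        // |P| - |Q| when positive, 0 otherwise
        return Math.max(disjointPairs - sharingPairs, 0);
    }

    public static void main(String[] args) {
        // Point: getX, getY, dot and sub
        List<Set<String>> point = List.of(
                Set.of("x"),
                Set.of("y"),
                Set.of("x", "y"),
                Set.of("x", "y"));
        System.out.println(lcom(point)); // 0
    }
}
```

For Point, only the pair formed by getX and getY is disjoint, so the difference is negative and the result is 0.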

Lack of cohesion implies that a class violates the Single Responsibility Principle and could be split into two different classes. Listing 18 shows the Group class. The only two methods in this class use disjoint sets of fields: compareTo uses weight while draw uses color and name. Computing the metric we get: \$\text{LCOM}(C) = |P| - |Q| = 1 - 0 = 1\$.

compareTo and weight could be separated from the rest.

    class Group {
        private int weight;
        private String name;
        private Color color;

        public Group(String name, Color color, int weight) {
            this.name = name;
            this.color = color;
            this.weight = weight;
        }

        public int compareTo(Group other) {
            return weight - other.weight;
        }

        public void draw() {
            Screen.rectangle(color, name);
        }
    }

Tight Class Cohesion (TCC) and Loose Class Cohesion (LCC) are two other well-known and widely used metrics to evaluate the cohesion of a class (49). Both metrics start by creating a graph from the class. The graph is constructed as follows: given a class C, each method m declared in the class becomes a node. Given any two methods m and n declared in C, we add an edge between m and n if and only if m and n use at least one instance variable in common. Going back to the definition of LCOM, we add an edge between m and n if \$I_m \cap I_n \ne \emptyset\$. TCC is defined as the ratio of the number of directly connected pairs of nodes in the graph to the number of all pairs of nodes. LCC, in turn, is the ratio of the number of connected pairs of nodes (directly or indirectly) to the number of all pairs of nodes. As before, constructors are not used.

Figure 8 shows the graph that results from the class Point. In this example there are 5 edges or direct connections and, as there are 4 nodes, there are 6 pairs of nodes (methods) in total. Therefore \$\text{TCC} = 5/6 \approx 0.83\$. On the other hand, all nodes are connected and \$\text{LCC} = 6/6 = 1\$.

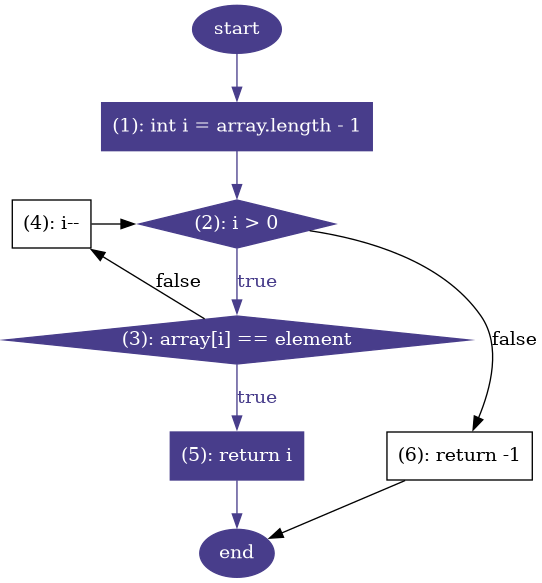

If we do the same for the Group class, we obtain a graph where all nodes are disconnected and both LCC and TCC are 0.
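Both ratios can be computed with a short sketch. The class `ClassCohesion` and the method `tccAndLcc` are hypothetical names. As with LCOM, the sketch assumes that the sets of instance variables used by each method are given and that constructors are excluded. It also assumes, as a convention of this sketch only, that a class with fewer than two methods gets 0 for both metrics.

```java
import java.util.Collections;
import java.util.List;
import java.util.Set;

final class ClassCohesion {

    // Returns { TCC, LCC } for the given per-method sets of instance variables.
    static double[] tccAndLcc(List<Set<String>> usedVariables) {
        int n = usedVariables.size();
        int pairs = n * (n - 1) / 2;
        if (pairs == 0) {
            return new double[] {0.0, 0.0}; // convention of this sketch
        }

        // Edge between two methods when they share at least one instance variable.
        boolean[][] connected = new boolean[n][n];
        int direct = 0;
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < i; j++) {
                if (!Collections.disjoint(usedVariables.get(i), usedVariables.get(j))) {
                    connected[i][j] = true;
                    connected[j][i] = true;
                    direct++;
                }
            }
        }

        // Transitive closure (Warshall's algorithm) to find indirect connections.
        for (int k = 0; k < n; k++) {
            for (int i = 0; i < n; i++) {
                for (int j = 0; j < n; j++) {
                    if (connected[i][k] && connected[k][j]) {
                        connected[i][j] = true;
                    }
                }
            }
        }
        int reachable = 0;
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < i; j++) {
                if (connected[i][j]) {
                    reachable++;
                }
            }
        }
        return new double[] {(double) direct / pairs, (double) reachable / pairs};
    }

    public static void main(String[] args) {
        // Point: getX, getY, dot and sub
        double[] point = tccAndLcc(List.of(
                Set.of("x"), Set.of("y"), Set.of("x", "y"), Set.of("x", "y")));
        System.out.println(point[0] + " " + point[1]);

        // Group: compareTo and draw
        double[] group = tccAndLcc(List.of(
                Set.of("weight"), Set.of("color", "name")));
        System.out.println(group[0] + " " + group[1]); // 0.0 0.0
    }
}
```

For Point, getX and getY are not directly connected, but both are connected to dot, which is why LCC reaches 1 while TCC stays at 5/6.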